问题的提出

公司有一个用户行为分析系统,可以记录用户在使用公司产品过程中的一系列操作轨迹,便于分析产品使用情况以便优化产品 UI 界面布局。这套系统有点类似于 Google Analyse(GA),所不同的是,为了让用户把产品用起来,公司会将用户行为与优惠活动联系起来,例如购买产品后一段时间内如果使用时长达到一定标准,就能从销售那里领取小礼品,初衷是为了让用户把产品用起来。可是这个活动一经推出,我这边电话就被打爆了,因为经常有用户感觉自己在用产品了,但是销售给他反馈的时长信息却没有增长。于是实施同事会要求我们去排查问题,可能很多情况下,就是用户没联网、或者防火墙设置的太严格了导致数据上不来、甚至是后台服务挂了导致数据没及时分析……其实 90% 以上的问题和客户端没关系,而是后台在某个环节丢失了数据,但是作为查问题的第一个环节,客户端开发往往会被顶到前线充当 call center 的角色,浪费大量个人时间不说,效率也是极低的。于是自然而然就会想到,能不能做一个分析工具,自动从日志里提出关键数据,做成直观的图表展示给实施人员,可以一眼就能定位出是客户端还是后台的问题,从而达成初步排 (甩) 查 (锅) 的目的?

问题的解决

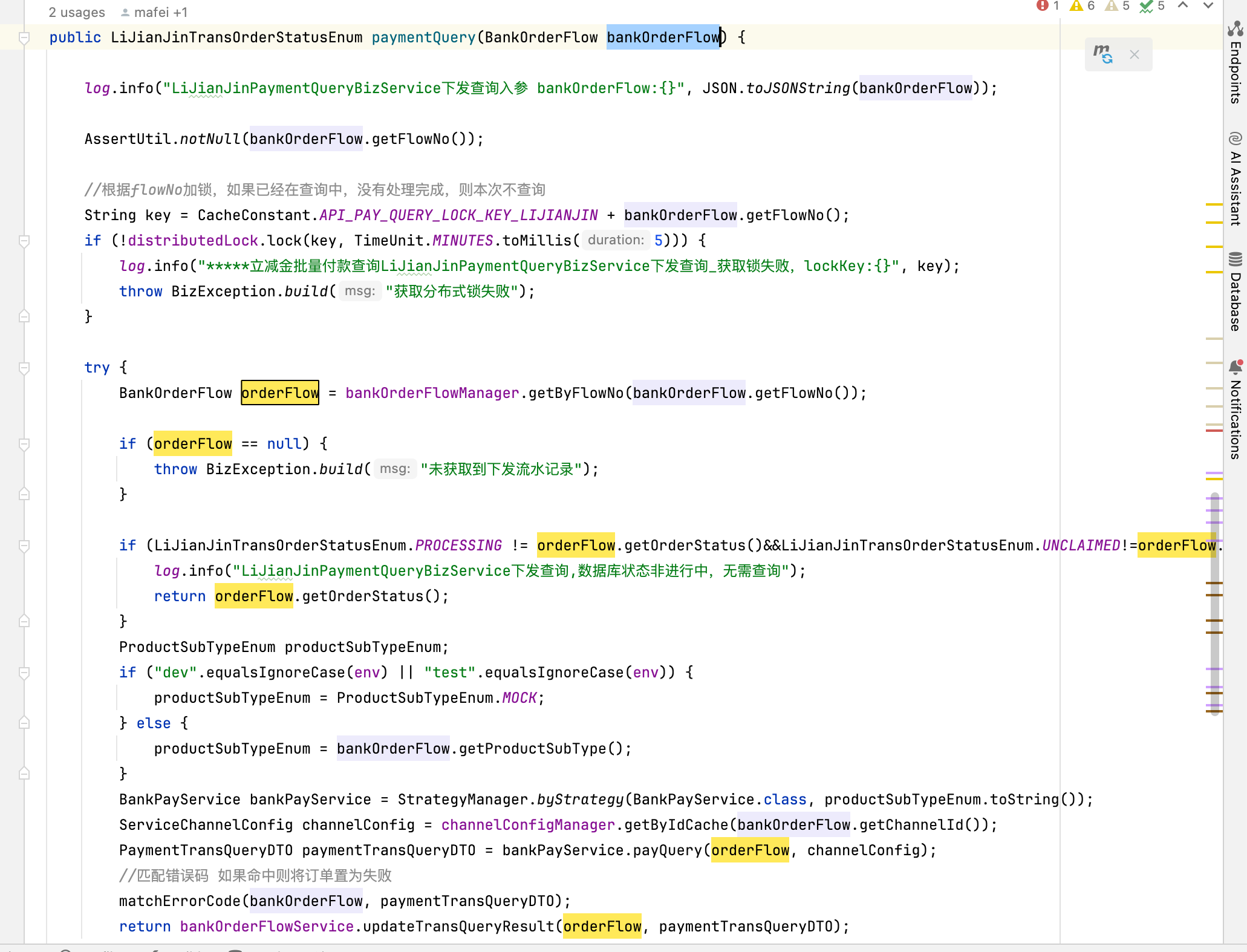

要能从日志中提取出行为数据(专业术语称为埋点)并根据时间顺序绘制一幅活跃图的根基,是日志中要有相应的记录,巧的是,之前为了排查问题,已经在日志中输出了大量这样的信息:

2020-08-31 10:15:12.531 2248 2564 [1] ########################################### 2020-08-31 10:15:12.531 2248 2564 [2] Service starting ! 2020-08-31 10:15:14.668 2248 2564 [1] collecting OS information... 2020-08-31 10:15:14.949 2248 2564 [1] TimeZone: 480 2020-08-31 10:15:14.949 2248 2564 [1] CountryCode: 86 2020-08-31 10:15:14.949 2248 2564 [1] OSLanugage Name: 1033 2020-08-31 10:15:14.980 2248 2564 [1] Version: 6.1.7601 2020-08-31 10:15:14.980 2248 2564 [1] OSArchitectur: 64-bit 2020-08-31 10:15:14.980 2248 2564 [1] OperatingSystemSKU: 1 2020-08-31 10:15:14.980 2248 2564 [2] Start to init Db. 2020-08-31 10:15:14.980 2248 2564 [2] local device id: 7aaa811b-f720-46b9-bdf3-e6b186cdcc77 2020-08-31 10:15:14.980 2248 2564 [2] sqlite3 database threadsafe = 1 2020-08-31 10:15:15.043 2248 2564 [2] Init Db done. 2020-08-31 10:15:15.043 2248 2564 [2] Start Event Worker. 2020-08-31 10:15:15.043 2248 2564 [2] Start App Worker. 2020-08-31 10:15:15.043 2248 2564 [2] Start Trace Worker. 2020-08-31 10:15:15.043 2248 2564 [2] HB Started. 2020-08-31 10:15:15.043 2248 2564 [1] Local Server Start work done. 2020-08-31 10:15:15.043 2248 3888 [2] Local Server started. 2020-08-31 10:15:15.043 2248 2564 [2] delete files: C:\ProgramData\Glodon\GUX\GCI\\GUX_*.session 2020-08-31 10:15:15.043 2248 2564 [2] start to read strategy from DB 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GMD2017' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GMJ2017' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GFYCM2017' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GAQ2017' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GJH2017' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GMJ' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GBS2017' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GBCB2017' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GFYC2017' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GSJ2017' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GFYC' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] add item 'GFYQ' to aggregation list 2020-08-31 10:15:15.043 2248 2564 [2] Read Local App Strategy Done. 2020-08-31 10:15:15.043 2248 2564 [2] Start GMTService done.Version=2.11.0.1777 2020-08-31 10:15:15.043 2248 3724 [2] Adjust Time thread start. 2020-08-31 10:15:15.058 2248 3076 [2] gcm started 2020-08-31 10:15:15.058 2248 3076 [2] Start to Get Strategy. 2020-08-31 10:15:15.058 2248 1748 [2] GUX Online failed, code=4 2020-08-31 10:15:15.089 2248 424 [2] GUX Online succeed. 2020-08-31 10:15:15.121 2248 3076 [1] http get: /v3/server_status?type=102&data_version=2.4, result 200 2020-08-31 10:15:15.121 2248 3076 [4] WinHttpQueryHeaders failed, flag = 0x20000005, errno = 12150 2020-08-31 10:15:15.121 2248 3076 [2] query content length failed, try with transfer-encoding.. 2020-08-31 10:15:15.121 2248 3076 [2] Start to process received message 102 2020-08-31 10:15:15.121 2248 3076 [2] Recv POST strategy. 2020-08-31 10:15:15.121 2248 3076 [2] start post: 1, period: 300 2020-08-31 10:15:15.401 2248 3076 [1] http get: /v3/server_status?type=103&data_version=2.4, result 200 2020-08-31 10:15:15.401 2248 3076 [4] WinHttpQueryHeaders failed, flag = 0x20000005, errno = 12150 2020-08-31 10:15:15.401 2248 3076 [2] query content length failed, try with transfer-encoding.. 2020-08-31 10:15:15.401 2248 3076 [2] Start to process received message 103 2020-08-31 10:15:15.401 2248 3076 [2] Recv TIME strategy. 2020-08-31 10:15:15.401 2248 3076 [2] Time shift: 0 2020-08-31 10:15:15.464 2248 3076 [1] http get: /v3/server_status?type=100&data_version=2.4&md5=ba16886ef2cf522245ad6f0dd20380c958e834ff7bb54d7a2d46fbf416806462, result 200 2020-08-31 10:15:15.464 2248 3076 [4] WinHttpQueryHeaders failed, flag = 0x20000005, errno = 12150 2020-08-31 10:15:15.464 2248 3076 [2] query content length failed, try with transfer-encoding.. 2020-08-31 10:15:15.776 2248 3076 [2] Start to process received message 100 2020-08-31 10:15:15.776 2248 3076 [2] Recv APP strategy. 2020-08-31 10:15:15.776 2248 3076 [2] GrandDog [140]: 1, 1, 0 2020-08-31 10:15:15.776 2248 3076 [2] CubicostTRB [178]: 1, 1, 0 2020-08-31 10:15:15.776 2248 3076 [2] GTJ2017 [78]: 1, 1, 0 2020-08-31 10:15:15.776 2248 3076 [2] GMD2017 [137]: 1, 1, 1 2020-08-31 10:15:15.776 2248 3076 [2] GDraw [180]: 1, 1, 0 2020-08-31 10:15:15.776 2248 3076 [2] GLC [276]: 1, 1, 0 2020-08-31 10:15:15.776 2248 3076 [2] GUX [164]: 1, 1, 0 2020-08-31 10:15:15.776 2248 3076 [2] GCCP5 [67]: 1, 1, 0 2020-08-31 10:15:15.776 2248 3076 [2] GCCP6 [261]: 1, 1, 0 2020-08-31 10:15:15.776 2248 3076 [2] TME [17]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GWS [25]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] MOZIDIFFER [36]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GMJ [40]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] GCL2013 [44]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GGJ2013 [45]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] MD_GMA [56]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GDQ2015 [75]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GQI2017 [76]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GJG2015 [77]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GMP2016 [80]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] Revit2GFC4GMP [83]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GTJ2017CAD [100]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GYZB2017 [112]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] BIM5D_PC [114]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GFYCM [115]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GBCB [125]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] CubicostTAS [128]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GMD [129]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GAQ2017 [131]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] GBCB2017 [132]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] GBS2017 [133]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] GFYC2017 [134]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] GFYCM2017 [135]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] GMJ2017 [136]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] GSJ2017 [138]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] GJH2017 [139]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] TeamViewer [142]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] ZPert [148]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GBS [160]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GIR_C [162]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] TBQ2017 [163]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GYJC2017 [167]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GSXGZT2016 [177]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] TBQD [181]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] TTED [182]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] TCFD [183]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GSCApp [188]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GFYC [200]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] GDQ2017 [207]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GO [217]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] AppGbmp [218]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GQI2018 [222]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GDS2017 [226]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GLDTCS [228]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] TenderGo [231]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GDQ2018 [232]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] SectionManual [233]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] BeamGo [234]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GJG2018 [235]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] RevitViewer [236]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] BIM5D_PC_TEST [237]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] BIM5D_PC_TRIAL [238]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GEC5 [239]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GFYQ [240]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] RoadDesigner [241]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] CECS100G [242]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GBES [243]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] Ceshi [244]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] dpUpdate [245]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GFY4 [246]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GGPT [248]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GMA2020 [249]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] JZYK [250]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GVB5 [251]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GHW5 [252]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GUp [253]: 1, 0, 1 2020-08-31 10:15:15.776 2248 3076 [2] BIM_COST [254]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GICP5 [255]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] bim5d_basic [256]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GWH5 [257]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GFY4_2019 [258]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GDD2019 [259]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GCCP5_ShanDong_64 [260]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GSC6 [262]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GCCP6_WP [263]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GEB6 [264]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GSH6 [265]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GTech2019 [266]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GPC5 [267]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GTJ2021 [268]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GDE2019 [269]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] CubicostTIO [270]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GCA5 [271]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GLC5 [272]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GMT5 [273]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GCN5 [274]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GHC5 [275]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GVB6 [277]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GJG2021 [278]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GJG [279]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GAP [280]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GSTP [281]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] TRS2021 [283]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] TMEC [284]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] CubicostTMEC [285]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GGF5 [286]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GRE5 [287]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [2] GA_CloudPlugin [310]: 1, 0, 0 2020-08-31 10:15:15.776 2248 3076 [1] Parse App-Id Done. 2020-08-31 10:15:15.869 2248 3076 [2] start to read strategy from DB 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GMD2017' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GMJ' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GAQ2017' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GBCB2017' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GBS2017' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GFYC2017' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GFYCM2017' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GMJ2017' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GSJ2017' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GJH2017' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GFYC' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GFYQ' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] add item 'GUp' to aggregation list 2020-08-31 10:15:15.869 2248 3076 [2] Read Local App Strategy Done. 2020-08-31 10:15:15.947 2248 3076 [1] http get: /v3/server_status?type=101&data_version=2.4, result 200 2020-08-31 10:15:15.947 2248 3076 [4] WinHttpQueryHeaders failed, flag = 0x20000005, errno = 12150 2020-08-31 10:15:15.947 2248 3076 [2] query content length failed, try with transfer-encoding.. 2020-08-31 10:15:16.275 2248 3076 [2] Start to process received message 101 2020-08-31 10:15:16.275 2248 3076 [2] Recv KEY strategy. 2020-08-31 10:15:16.275 2248 2984 [1] start posting local information... 2020-08-31 10:15:16.275 2248 2984 [1] User: yunh, Domain: WIN-AS1KN0S7NAJ 2020-08-31 10:15:16.431 2248 2984 [1] User Profile: 2020-08-31 10:15:16.525 2248 2984 [2] load user profile OK 2020-08-31 10:15:16.525 2248 2984 [2] impersonate logged on user OK 2020-08-31 10:15:16.525 2248 2984 [2] revert to self OK 2020-08-31 10:15:16.525 2248 2984 [2] dpi user = yunh, current = 96 2020-08-31 10:15:16.805 2248 2984 [2] dpi infact = 96 2020-08-31 10:15:16.805 2248 2984 [2] dpi scale = 100% 2020-08-31 10:15:16.805 2248 2984 [4] Excute SQL Error: [19] constraint failed SQL=insert into DpiSettingTbl (user, infact, setting, scale) values('yunh','96','96','100') 2020-08-31 10:15:16.805 2248 2984 [3] insert dpi setting for yunh failed, try updating 2020-08-31 10:15:16.868 2248 2984 [1] 2020-08-31 10:15:16.868 2248 2984 [1] environment strings: 2020-08-31 10:15:16.868 2248 2984 [1] ALLUSERSPROFILE=C:\ProgramData 2020-08-31 10:15:16.868 2248 2984 [1] APPDATA=C:\Users\yunh\AppData\Roaming 2020-08-31 10:15:16.868 2248 2984 [1] CommonProgramFiles=C:\Program Files (x86)\Common Files 2020-08-31 10:15:16.868 2248 2984 [1] CommonProgramFiles(x86)=C:\Program Files (x86)\Common Files 2020-08-31 10:15:16.868 2248 2984 [1] CommonProgramW6432=C:\Program Files\Common Files 2020-08-31 10:15:16.868 2248 2984 [1] COMPUTERNAME=WIN-AS1KN0S7NAJ 2020-08-31 10:15:16.868 2248 2984 [1] ComSpec=C:\Windows\system32\cmd.exe 2020-08-31 10:15:16.868 2248 2984 [1] FP_NO_HOST_CHECK=NO 2020-08-31 10:15:16.868 2248 2984 [1] GLODON_COMMON_APPDATA=C:\ProgramData 2020-08-31 10:15:16.868 2248 2984 [1] GLODON_COMMON_DESKTOP=C:\Users\Public\Desktop 2020-08-31 10:15:16.868 2248 2984 [1] GLODON_COMMON_DOCUMENT=C:\Users\Public\Documents 2020-08-31 10:15:16.868 2248 2984 [1] GLODON_COMMON_FILES_32=C:\Program Files (x86)\Common Files 2020-08-31 10:15:16.868 2248 2984 [1] GLODON_COMMON_FILES_64=C:\Program Files\Common Files 2020-08-31 10:15:16.868 2248 2984 [1] GLODON_PROGRAM_FILES_32=C:\Program Files (x86) 2020-08-31 10:15:16.868 2248 2984 [1] GLODON_PROGRAM_FILES_64=C:\Program Files 2020-08-31 10:15:16.868 2248 2984 [1] GLODON_SYSTEM_32=C:\Windows\SysWOW64 2020-08-31 10:15:16.868 2248 2984 [1] GLODON_SYSTEM_64=C:\Windows\system32 2020-08-31 10:15:16.868 2248 2984 [1] GLODON_SYSTEM_DRIVE=C: 2020-08-31 10:15:16.868 2248 2984 [1] GLODON_WINDOWS_DIR=C:\Windows 2020-08-31 10:15:16.868 2248 2984 [1] HOMEDRIVE=C: 2020-08-31 10:15:16.868 2248 2984 [1] HOMEPATH=\Users\yunh 2020-08-31 10:15:16.868 2248 2984 [1] LOCALAPPDATA=C:\Users\yunh\AppData\Local 2020-08-31 10:15:16.868 2248 2984 [1] LOGONSERVER=\\WIN-AS1KN0S7NAJ 2020-08-31 10:15:16.868 2248 2984 [1] NUMBER_OF_PROCESSORS=1 2020-08-31 10:15:16.868 2248 2984 [1] OS=Windows_NT 2020-08-31 10:15:16.868 2248 2984 [1] Path=C:\Windows\system32;C:\Windows;C:\Windows\System32\Wbem;C:\Windows\System32\WindowsPowerShell\v1.0\ 2020-08-31 10:15:16.868 2248 2984 [1] PATHEXT=.COM;.EXE;.BAT;.CMD;.VBS;.VBE;.JS;.JSE;.WSF;.WSH;.MSC 2020-08-31 10:15:16.868 2248 2984 [1] PROCESSOR_ARCHITECTURE=AMD64 2020-08-31 10:15:16.868 2248 2984 [1] PROCESSOR_IDENTIFIER=Intel64 Family 6 Model 60 Stepping 3, GenuineIntel 2020-08-31 10:15:16.868 2248 2984 [1] PROCESSOR_LEVEL=6 2020-08-31 10:15:16.868 2248 2984 [1] PROCESSOR_REVISION=3c03 2020-08-31 10:15:16.868 2248 2984 [1] ProgramData=C:\ProgramData 2020-08-31 10:15:16.868 2248 2984 [1] ProgramFiles=C:\Program Files (x86) 2020-08-31 10:15:16.868 2248 2984 [1] ProgramFiles(x86)=C:\Program Files (x86) 2020-08-31 10:15:16.868 2248 2984 [1] ProgramW6432=C:\Program Files 2020-08-31 10:15:16.868 2248 2984 [1] PSModulePath=C:\Windows\system32\WindowsPowerShell\v1.0\Modules\ 2020-08-31 10:15:16.868 2248 2984 [1] PUBLIC=C:\Users\Public 2020-08-31 10:15:16.868 2248 2984 [1] SystemDrive=C: 2020-08-31 10:15:16.868 2248 2984 [1] SystemRoot=C:\Windows 2020-08-31 10:15:16.868 2248 2984 [1] TEMP=C:\Users\yunh\AppData\Local\Temp 2020-08-31 10:15:16.868 2248 2984 [1] TMP=C:\Users\yunh\AppData\Local\Temp 2020-08-31 10:15:16.868 2248 2984 [1] USERDOMAIN=WIN-AS1KN0S7NAJ 2020-08-31 10:15:16.868 2248 2984 [1] USERNAME=yunh 2020-08-31 10:15:16.868 2248 2984 [1] USERPROFILE=C:\Users\yunh 2020-08-31 10:15:16.868 2248 2984 [1] windir=C:\Windows 2020-08-31 10:15:16.868 2248 2984 [1] windows_tracing_flags=3 2020-08-31 10:15:16.868 2248 2984 [1] windows_tracing_logfile=C:\BVTBin\Tests\installpackage\csilogfile.log 2020-08-31 10:15:16.868 2248 2984 [1] 2020-08-31 10:15:21.673 2248 2984 [2] first got hwid: {C4F752B9-EF31-580A-B75C-67E300000000} 2020-08-31 10:15:21.673 2248 2984 [2] first got ssid: 2020-08-31 10:15:21.673 2248 2984 [1] local info:{ "metrics": { "os": "Windows", "os_version": "7", "os_version1": "6.1.7601", "os_edition": "Ultimate Edition", "dev_type": "PC", "resolution": "1920x1080", "sysbits": "64", "cpu_sum": "Intel(R) Core(TM) i7-4790 CPU @ 3.60GHz 3.60GHz", "total_mem": "2048MB", "language": "English (United States)", "pctype": "unknown", "language_id": "1033", "timezone": "8", "dpiscale": "100" }, "mem": { "total_mem": "2048MB" }, "videocards": [ { "name": "VMware SVGA 3D", "manufacturer": "VMware, Inc.", "chipset": "VMware Virtual SVGA 3D Graphics Adapter", "dac_type": "n\/a", "memory": "1024MB", "inuse": "1", "pixelbits": "32", "monitor_count": "1", "driverversion": "8.15.1.33", "driverdate": "20151016000000.000000-000", "pnpdeviceid": "PCI\\VEN_15AD&DEV_0405&SUBSYS_040515AD&REV_00\\3&2B8E0B4B&0&78", "caption": "VMware SVGA 3D", "frequency": "60Hz", "resolution": "1920x1080" } ], "cpu": { "name": "Intel(R) Core(TM) i7-4790 CPU @ 3.60GHz", "manufacturer": "GenuineIntel", "physicalcores": "1", "logicalcores": "1", "frequency": "3.60GHz", "address_width": "64", "voltage": "33" }, "baseboard": { "manufacturer": "Intel Corporation", "product": "440BX Desktop Reference Platform", "sn": "None" }, "dpisetting": [ { "user": "yunh", "infact": "96", "setting": "96", "scale": "100" } ], "msg_type": "7", "app_name": "GUX", "app_version": "2.11.0.1777", "device_id": "7aaa811b-f720-46b9-bdf3-e6b186cdcc77", "mt_devid": "{C4F752B9-EF31-580A-B75C-67E300000000}", "ssid": "", "timestamp": "1598840121673", "sessionid": "", "extra": "" } 2020-08-31 10:15:21.673 2248 2984 [2] Read device info succeed. 2020-08-31 10:15:21.673 2248 2984 [2] local hardware changed, prepare to post system information 2020-08-31 10:15:21.673 2248 2984 [2] first got ssid: 2020-08-31 10:15:21.766 2248 2984 [1] http post: /v3/do, result 200 2020-08-31 10:15:21.766 2248 2984 [1] 6 : finish 2020-08-31 10:15:21.766 2248 2984 [2] Post local information succeed.. 2020-08-31 10:15:21.766 2248 2984 [1] collectLocalInfoData end... 2020-08-31 10:15:44.650 2248 3888 [2] local client accepted. 2020-08-31 10:15:44.681 2248 2936 [2] Send sync msg to Client, socketNo=1, len=46 2020-08-31 10:15:44.681 2248 2936 [2] GDraw start, appid = B84c955afdfe63ae1acf0e1a45f6dcc9e4fb64a3, inst = 1473 2020-08-31 10:15:44.697 2248 3800 [2] add event 3 (1) 2020-08-31 10:15:44.697 2248 3360 [4] Excute SQL Error: [21] library routine called out of sequence SQL=insert into B84c955afdfe63ae1acf0e1a45f6dcc9e4fb64a3(type, key , count , data, timestamp ) values('5','启动','1','{"dognum":"","fname":"Æô¶¯","fngroup":"VisDraw","gid":"","pcode":"-110000","query":{},"sessionid":"3680"} ','1598840144697') 2020-08-31 10:15:44.697 2248 3360 [1] add action GDraw.启动 (0) 2020-08-31 10:15:44.697 2248 3360 [2] create action table B84c955afdfe63ae1acf0e1a45f6dcc9e4fb64a3 (1). 2020-08-31 10:15:44.712 2248 3360 [2] insert 1 record into db by transaction elapsed 10 ms 2020-08-31 10:15:44.837 2248 3360 [1] add action GDraw.新建文件 (1) 2020-08-31 10:15:44.837 2248 3360 [2] insert 1 record into db by transaction elapsed 1 ms 2020-08-31 10:15:45.071 2248 3076 [2] Save Timer Expired.Start to Save Aggregation Data. 2020-08-31 10:15:48.254 2248 3360 [1] add action GDraw.添加弧 (1) 2020-08-31 10:15:48.254 2248 3360 [2] insert 1 record into db by transaction elapsed 2 ms 2020-08-31 10:15:53.683 2248 3360 [1] add action GDraw.添加长方形 (1) 2020-08-31 10:15:53.683 2248 3360 [2] insert 1 record into db by transaction elapsed 2 ms 2020-08-31 10:15:57.349 2248 3360 [1] add action GDraw.添加圆 (1) 2020-08-31 10:15:57.349 2248 3360 [2] insert 1 record into db by transaction elapsed 2 ms 2020-08-31 10:16:01.389 2248 1992 [2] GLC heart beat timeout, appid = B21170b9e920c9c0fb57374ee21d0b71af1adf3d, inst = 2 2020-08-31 10:16:01.389 2248 1992 [2] GDraw heart beat timeout, appid = Bb3e5dfebfa0f02d987a93865bc9aed3e81dee09, inst = 3 2020-08-31 10:16:01.389 2248 3800 [2] add event 4 (1) 2020-08-31 10:16:01.389 2248 3800 [2] add event 4 (1) 2020-08-31 10:16:03.854 2248 3360 [1] add action GDraw.添加圆 (1) 2020-08-31 10:16:03.869 2248 3360 [2] insert 1 record into db by transaction elapsed 3 ms 2020-08-31 10:16:10.843 2248 3360 [1] add action GDraw.添加椭圆 (1) 2020-08-31 10:16:10.843 2248 3360 [2] insert 1 record into db by transaction elapsed 3 ms 2020-08-31 10:16:14.290 2248 3360 [1] add action GDraw.添加多边形 (1) 2020-08-31 10:16:14.290 2248 3360 [2] insert 1 record into db by transaction elapsed 2 ms 2020-08-31 10:16:19.251 2248 3360 [1] add action GDraw.添加文本 (1) 2020-08-31 10:16:19.251 2248 3360 [2] insert 1 record into db by transaction elapsed 3 ms 2020-08-31 10:16:24.274 2248 3360 [1] add action GDraw.添加直线 (1) 2020-08-31 10:16:24.274 2248 3360 [2] insert 1 record into db by transaction elapsed 3 ms 2020-08-31 10:16:37.363 2248 3360 [1] add action GDraw.设置文本 (1) 2020-08-31 10:16:37.378 2248 3360 [2] insert 1 record into db by transaction elapsed 3 ms 2020-08-31 10:16:37.378 2248 3360 [1] add action GDraw.设置文本 (1) 2020-08-31 10:16:37.378 2248 3360 [1] add action GDraw.设置文本 (1) 2020-08-31 10:16:37.394 2248 3360 [2] insert 2 record into db by transaction elapsed 17 ms 2020-08-31 10:16:41.325 2248 3360 [1] add action GDraw.置底椭圆 (1) 2020-08-31 10:16:41.325 2248 3360 [1] add action GDraw.置底文本 (1) 2020-08-31 10:16:41.325 2248 3360 [1] add action GDraw.置底直线 (1) 2020-08-31 10:16:41.341 2248 3360 [2] insert 3 record into db by transaction elapsed 3 ms 2020-08-31 10:16:45.943 2248 3360 [1] add action GDraw.移动椭圆 (1) 2020-08-31 10:16:45.943 2248 3360 [2] insert 1 record into db by transaction elapsed 2 ms 2020-08-31 10:16:49.390 2248 3360 [1] add action GDraw.删除圆 (1) 2020-08-31 10:16:49.390 2248 3360 [2] insert 1 record into db by transaction elapsed 2 ms 2020-08-31 10:16:52.963 2248 3360 [1] add action GDraw.删除圆 (1) 2020-08-31 10:16:52.978 2248 3360 [2] insert 1 record into db by transaction elapsed 3 ms 2020-08-31 10:16:55.162 2248 3360 [1] add action GDraw.粘贴圆 (1) 2020-08-31 10:16:55.162 2248 3360 [2] insert 1 record into db by transaction elapsed 2 ms 2020-08-31 10:16:59.873 2248 3360 [1] add action GDraw.调整圆 (1) 2020-08-31 10:16:59.873 2248 3360 [2] insert 1 record into db by transaction elapsed 2 ms 2020-08-31 10:17:04.475 2248 3360 [1] add action GDraw.调整长方形 (1) 2020-08-31 10:17:04.475 2248 3360 [2] insert 1 record into db by transaction elapsed 4 ms 2020-08-31 10:17:07.798 2248 3360 [1] add action GDraw.调整多边形 (1) 2020-08-31 10:17:07.798 2248 3360 [2] insert 1 record into db by transaction elapsed 3 ms 2020-08-31 10:17:11.511 2248 3360 [1] add action GDraw.调整弧 (1) 2020-08-31 10:17:11.511 2248 3360 [2] insert 1 record into db by transaction elapsed 2 ms 2020-08-31 10:17:16.019 2248 3360 [1] add action GDraw.调整直线 (1) 2020-08-31 10:17:16.035 2248 3360 [2] insert 1 record into db by transaction elapsed 3 ms 2020-08-31 10:17:44.973 2248 3360 [1] add action GDraw.设置文本 (1) 2020-08-31 10:17:44.973 2248 3360 [1] add action GDraw.设置文本 (1) 2020-08-31 10:17:44.973 2248 3360 [1] add action GDraw.设置文本 (1) 2020-08-31 10:17:44.989 2248 3360 [2] insert 3 record into db by transaction elapsed 7 ms 2020-08-31 10:17:48.140 2248 3360 [1] add action GDraw.调整文本 (1) 2020-08-31 10:17:48.156 2248 3360 [2] insert 1 record into db by transaction elapsed 3 ms 2020-08-31 10:17:55.924 2248 3360 [1] add action GDraw.删除文本 (1) 2020-08-31 10:17:55.924 2248 3360 [2] insert 1 record into db by transaction elapsed 3 ms 2020-08-31 10:18:07.188 2248 3360 [1] add action GDraw.保存文件 (1) 2020-08-31 10:18:07.188 2248 3360 [2] insert 1 record into db by transaction elapsed 4 ms 2020-08-31 10:18:08.326 2248 3360 [1] add action GDraw.关闭文件 (1) 2020-08-31 10:18:08.326 2248 3360 [2] insert 1 record into db by transaction elapsed 2 ms 2020-08-31 10:18:08.576 2248 3888 [4] local client disconnected: End of file 2020-08-31 10:18:08.576 2248 2936 [2] GDraw stop, appid = B84c955afdfe63ae1acf0e1a45f6dcc9e4fb64a3, inst = 1473 2020-08-31 10:18:08.576 2248 3360 [1] add action GDraw.退出 (1) 2020-08-31 10:18:08.576 2248 3360 [2] insert 1 record into db by transaction elapsed 3 ms 2020-08-31 10:18:08.576 2248 3800 [2] add event 4 (1) ……

View Code

可以看到关键日志的格式类似如下:

2020-08-31 10:17:48.140 2248 3360 [1] add action GDraw.调整文本 (1)

分别对应的字段是:

日期 时间(带毫秒) 进程号 线程号 [日志级别] add action 产品标识.操作 (入队结果)

所以我们大体可以分两步完成这个工作,一是提取关键日志,二是根据数据绘制活跃图。

提取日志数据

因为日志里夹杂了太多不相关的数据,我们需要先进行一波清洗,这个是 sed 的拿手好戏:

$ sed -n '/add action .*\..*/p' gux_log.1.txt

2020-08-31 10:15:44.697 2248 3360 [1] add action GDraw.启动 (0)

2020-08-31 10:15:44.837 2248 3360 [1] add action GDraw.新建文件 (1)

2020-08-31 10:15:48.254 2248 3360 [1] add action GDraw.添加弧 (1)

2020-08-31 10:15:53.683 2248 3360 [1] add action GDraw.添加长方形 (1)

2020-08-31 10:15:57.349 2248 3360 [1] add action GDraw.添加圆 (1)

2020-08-31 10:16:03.854 2248 3360 [1] add action GDraw.添加圆 (1)

2020-08-31 10:16:10.843 2248 3360 [1] add action GDraw.添加椭圆 (1)

2020-08-31 10:16:14.290 2248 3360 [1] add action GDraw.添加多边形 (1)

2020-08-31 10:16:19.251 2248 3360 [1] add action GDraw.添加文本 (1)

2020-08-31 10:16:24.274 2248 3360 [1] add action GDraw.添加直线 (1)

2020-08-31 10:16:37.363 2248 3360 [1] add action GDraw.设置文本 (1)

2020-08-31 10:16:37.378 2248 3360 [1] add action GDraw.设置文本 (1)

2020-08-31 10:16:37.378 2248 3360 [1] add action GDraw.设置文本 (1)

2020-08-31 10:16:41.325 2248 3360 [1] add action GDraw.置底椭圆 (1)

2020-08-31 10:16:41.325 2248 3360 [1] add action GDraw.置底文本 (1)

2020-08-31 10:16:41.325 2248 3360 [1] add action GDraw.置底直线 (1)

2020-08-31 10:16:45.943 2248 3360 [1] add action GDraw.移动椭圆 (1)

2020-08-31 10:16:49.390 2248 3360 [1] add action GDraw.删除圆 (1)

2020-08-31 10:16:52.963 2248 3360 [1] add action GDraw.删除圆 (1)

2020-08-31 10:16:55.162 2248 3360 [1] add action GDraw.粘贴圆 (1)

2020-08-31 10:16:59.873 2248 3360 [1] add action GDraw.调整圆 (1)

2020-08-31 10:17:04.475 2248 3360 [1] add action GDraw.调整长方形 (1)

2020-08-31 10:17:07.798 2248 3360 [1] add action GDraw.调整多边形 (1)

2020-08-31 10:17:11.511 2248 3360 [1] add action GDraw.调整弧 (1)

2020-08-31 10:17:16.019 2248 3360 [1] add action GDraw.调整直线 (1)

2020-08-31 10:17:44.973 2248 3360 [1] add action GDraw.设置文本 (1)

2020-08-31 10:17:44.973 2248 3360 [1] add action GDraw.设置文本 (1)

2020-08-31 10:17:44.973 2248 3360 [1] add action GDraw.设置文本 (1)

2020-08-31 10:17:48.140 2248 3360 [1] add action GDraw.调整文本 (1)

2020-08-31 10:17:55.924 2248 3360 [1] add action GDraw.删除文本 (1)

2020-08-31 10:18:07.188 2248 3360 [1] add action GDraw.保存文件 (1)

2020-08-31 10:18:08.326 2248 3360 [1] add action GDraw.关闭文件 (1)

2020-08-31 10:18:08.576 2248 3360 [1] add action GDraw.退出 (1)

2020-08-31 10:20:14.546 2248 3360 [1] add action GDraw.timer_duration (0)

接下来要从清洗后的日志中提取日期、时间、进程号、产品标识、操作 5 个字段到该产品标识对应的文件中,这个是 awk 的分内活儿:

sed -n '/add action *.*/p' gux_log.1.txt | awk -F "[. ]+" '{ print $1,$2,$4,$9,$10 >> "plot/"$9".txt"; a[$9]++ } END { for (i in a) print i " : " a[i] }'

因为产品标识与操作是用 '.' 号分隔的,所以要在分隔符中加入点号 (默认为空格),不过这样一来,就会把时间和毫秒也分成两个字段,所以我们要提取的字段下标(从 1 开始)就分别变成了日期(1)、时间(2)、进程号(4)、产品标识(9)、操作(10)。提取到的数据行会写入对应的产品标识命名的文件中 ("plot/GDraw.txt"),注意这里的 $9 不能包含在引号中,否则不起作用 (awk 语法补习一下)。并递增对应的产品标识埋点数据,最后将所有的产品统计信息打印出来:

GDraw : 34

同时在 plot 子目录下可以找到以 GDraw 命名的数据文件 (GDraw.txt):

$ cat plot/GDraw.txt 2020-08-31 10:15:44 2248 GDraw 启动 2020-08-31 10:15:44 2248 GDraw 新建文件 2020-08-31 10:15:48 2248 GDraw 添加弧 2020-08-31 10:15:53 2248 GDraw 添加长方形 2020-08-31 10:15:57 2248 GDraw 添加圆 2020-08-31 10:16:03 2248 GDraw 添加圆 2020-08-31 10:16:10 2248 GDraw 添加椭圆 2020-08-31 10:16:14 2248 GDraw 添加多边形 2020-08-31 10:16:19 2248 GDraw 添加文本 2020-08-31 10:16:24 2248 GDraw 添加直线 2020-08-31 10:16:37 2248 GDraw 设置文本 2020-08-31 10:16:37 2248 GDraw 设置文本 2020-08-31 10:16:37 2248 GDraw 设置文本 2020-08-31 10:16:41 2248 GDraw 置底椭圆 2020-08-31 10:16:41 2248 GDraw 置底文本 2020-08-31 10:16:41 2248 GDraw 置底直线 2020-08-31 10:16:45 2248 GDraw 移动椭圆 2020-08-31 10:16:49 2248 GDraw 删除圆 2020-08-31 10:16:52 2248 GDraw 删除圆 2020-08-31 10:16:55 2248 GDraw 粘贴圆 2020-08-31 10:16:59 2248 GDraw 调整圆 2020-08-31 10:17:04 2248 GDraw 调整长方形 2020-08-31 10:17:07 2248 GDraw 调整多边形 2020-08-31 10:17:11 2248 GDraw 调整弧 2020-08-31 10:17:16 2248 GDraw 调整直线 2020-08-31 10:17:44 2248 GDraw 设置文本 2020-08-31 10:17:44 2248 GDraw 设置文本 2020-08-31 10:17:44 2248 GDraw 设置文本 2020-08-31 10:17:48 2248 GDraw 调整文本 2020-08-31 10:17:55 2248 GDraw 删除文本 2020-08-31 10:18:07 2248 GDraw 保存文件 2020-08-31 10:18:08 2248 GDraw 关闭文件 2020-08-31 10:18:08 2248 GDraw 退出 2020-08-31 10:20:14 2248 GDraw timer_duration

过滤掉了不相关的内容,显得清爽多了。这还仅仅是一个产品的埋点数据,如果有多个产品的话,就可以看到更有趣的一些输出,例如:

GDraw : 93 GTJ2021 : 17 GCCP5 : 70 GCCP6 : 154

每个产品会单独统计埋点数,同时在 plot 目录下生成每个产品的数据文件。

不同的日志格式

以为上面就解决了日志清洗的所有问题?No!因为代码的不断的调整,曾经出现过三种格式的日志,下面分别列举:

v2 [1] 2019-09-19 00:39:24 10772 5992 add action GDQ2018.合并 (1) v3 2020-06-11 13:38:34.666 17272 17712 [1] add action GCCP5.timer_duration (1) v3.1 2020-06-11 13:38:34.666 17272 17712 gmtsvc [1] add action GCCP5.timer_duration (1)

具体说我们刚才处理的日志其实是 v3 版本的,另外还有 v2 版本,这个是最老的日志,与 v3 的区别是日志级别是放在最前面的,时间没有毫秒值;还有 v3.1 版本,这个是最新的日志,与 v3 的区别是日志级别之前加了一个模块名称字段。这些字段顺序的调整与额外字段的增加,会导致我们读取时需要选择的字段下标不同,例如下面的 awk 语句分别对应上面三种版本的日志:

# v2 $ sed -n '/add action *.*/p' gux_log.1.txt | awk -F "[. ]+" '{ print $2,$3,$4,$8,$9 >> "plot/"$8".txt"; a[$8]++ } END { for (i in a) print i " : " a[i] }' # v3 $ sed -n '/add action *.*/p' gux_log.1.txt | awk -F "[. ]+" '{ print $1,$2,$4,$9,$10 >> "plot/"$9".txt"; a[$9]++ } END { for (i in a) print i " : " a[i] }' # v3.1 $ sed -n '/add action *.*/p' gux_log.1.txt | awk -F "[. ]+" '{ print $1,$2,$4,$10,$11 >> "plot/"$10".txt"; a[$10]++ } END { for (i in a) print i " : " a[i] }'

它们的输出都是一样的。好在 awk 支持条件判断,我们完全可以在一条语句里进行不同版本的日志处理:

$ sed -n '/add action *.*/p' gux_log.1.txt | awk -F "[. ]+" '{ if ( $1 ~ /\[[0-9]]/ ) { print $2,$3,$4,$8,$9 >> "plot/"$8".txt"; a[$8]++ } else if ( $9 == "action" ) { print $1,$2,$4,$10,$11 >> "plot/"$10".txt"; a[$10]++ } else { print $1,$2,$4,$9,$10 >> "plot/"$9".txt"; a[$9]++ } } END { for (i in a) print i " : " a[i] }'

被压扁在一行了,单独提取 awk 的部分列在下面看着更清晰一些:

1 { 2 if ( $1 ~ /\[[0-9]]/ ) { print $2,$3,$4,$8,$9 >> "plot/"$8".txt"; a[$8]++ } 3 else if ( $9 == "action" ) { print $1,$2,$4,$10,$11 >> "plot/"$10".txt"; a[$10]++ } 4 else { print $1,$2,$4,$9,$10 >> "plot/"$9".txt"; a[$9]++ } 5 } 6 7 END { for (i in a) print i " : " a[i] }

新增的部分主要在条件判断的地方,如果第 1 个字段符合正则式则走第一个条件的语句,这条正则式是说“方括号括起来的数字”,就是[1]、[2]、[3]……啦,这是用来匹配 v2 日志的;如果第 9 个字段为 “action",则走第二个条件的语句,这是用来匹配 v3.1 日志的;剩下的 else 就当然是用来区别 v3 日志的啦。这样一来即使一个文件中有不同版本的日志,也能轻松处理。

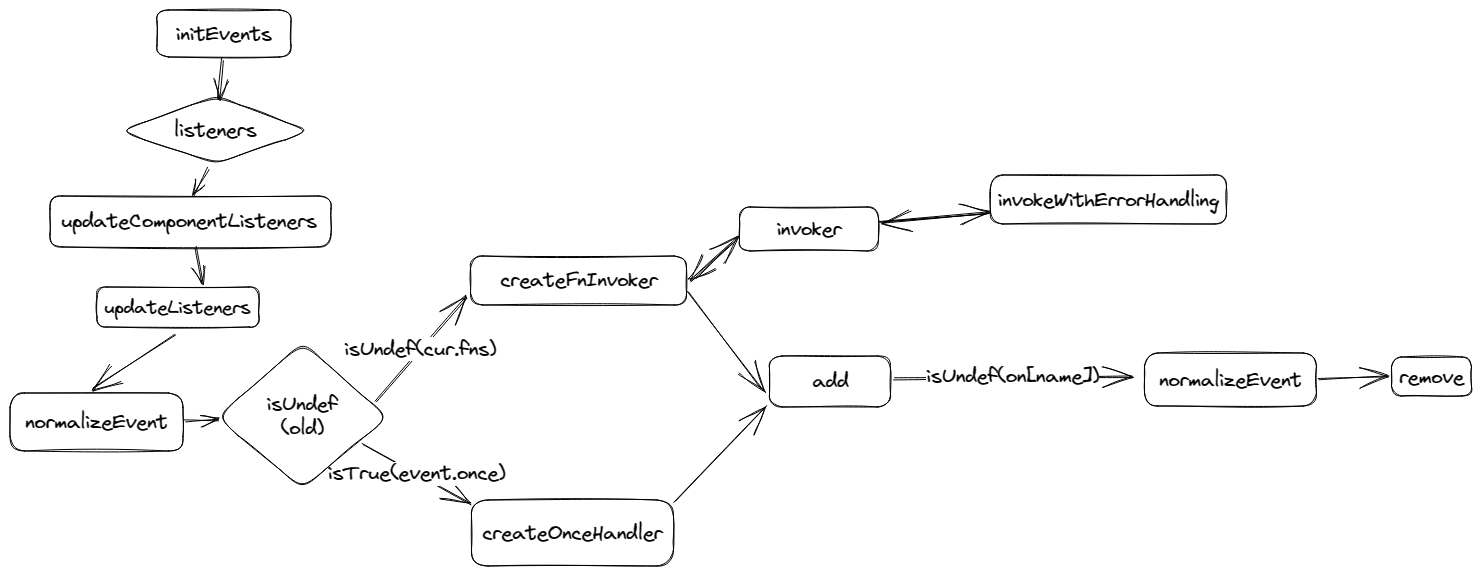

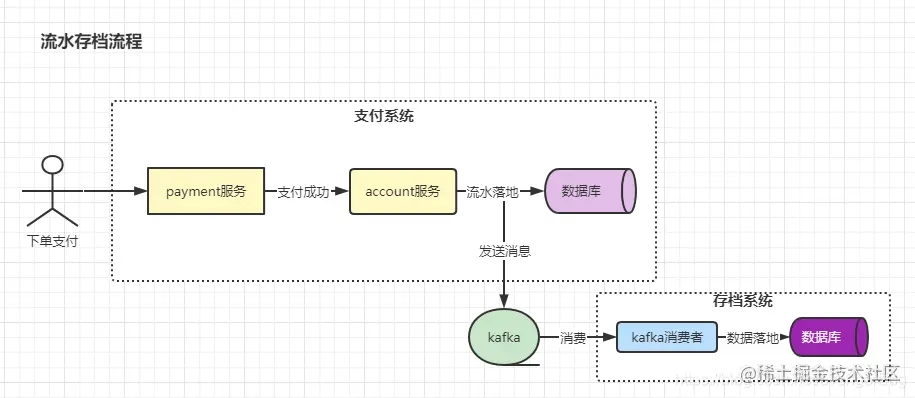

绘制埋点活跃图

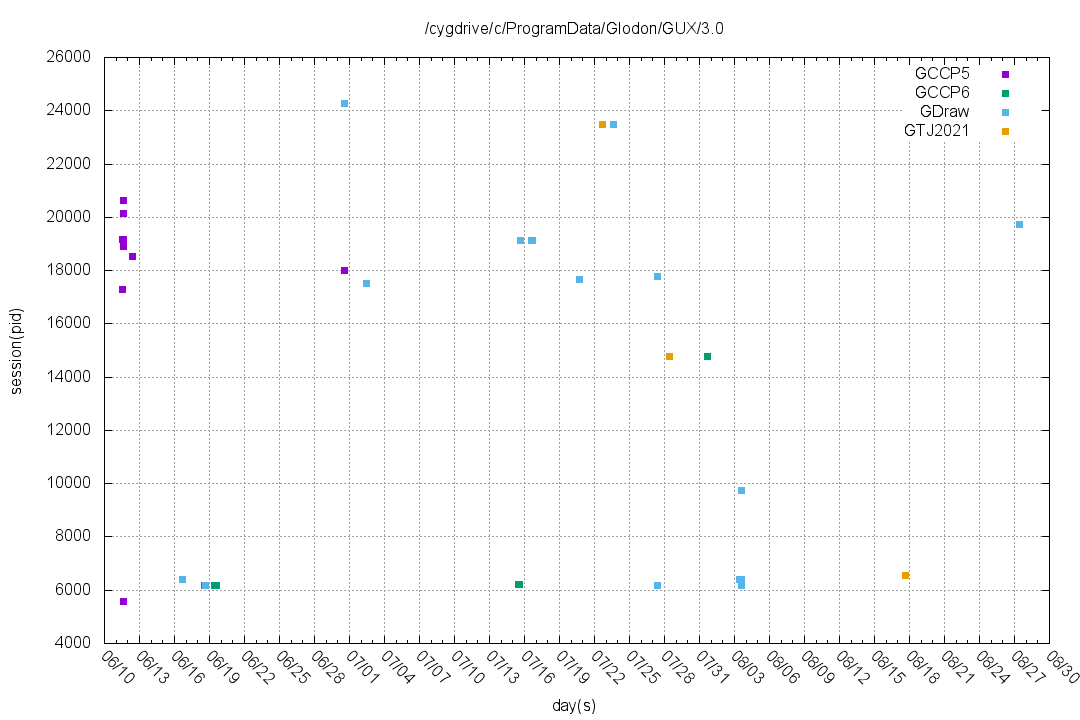

其实经过日志清洗后,得到的各产品"纯纯"的数据,就已经可以交差了,因为没有什么是比数据更准确的啦。但是考虑到我面对的人可能是连 Windows 服务在哪里控制都找不到的人,我决定更进一步,通过各产品的数据绘制一幅直观的埋点图,来给用户一个傻瓜式的体验(谁叫我懒),先上效果图:

这个图的横轴表示时间,纵轴表示会话,可以认为一个产品启动到关闭整个过程为一次会话 (通过进程 ID 值代表)。如果对应的时间段有数据点的话,就表示该产品在那个时间活跃过。以上图为例,如果我们想看 GCCP5 这个产品在哪天活跃过,就可以在图中查找图例对应颜色的点,可以看到,大部分集中在 6.10-6.13 这个区间,其它时间段比较少。又例如,我想查看 8.21-8.27 之间有无产品活跃,从图中看到这段时间区间是一片空白,所以可以确定用户在这个时间段没有使用公司的产品。那么这张图是怎么生成的呢,这就需要用到 gnuplot 了,其实我之前写过的文章有对这个工具使用方法的详细说明,感兴趣的可以参考这篇《查看博客园积分与排名趋势图的工具》。下面直接上代码,首先是 shell 脚本部分 (guxplot.sh):

1 #! /bin/bash 2 arg="logs='" 3 logs=$(cd plot; find . -type f -name "*.txt") 4 if [ -z "$logs" ]; then 5 echo "no data stripped, exit plotting!" 6 exit 7 fi 8 9 for log in $logs 10 do 11 # skip ./ 12 tmp=${log:2} 13 # emit .txt 14 tmp=${tmp%.*} 15 echo $tmp 16 arg="$arg$tmp " 17 done 18 19 arg="$arg'" 20 gnuplot -e "logpath='$1'" -e "$arg" ./gux.plt 21 # mspaint only know \ other than / 22 #mspaint "plot/gux.png" 23 mspaint "plot\\gux.png" &

这个脚本的核心是遍历之前生成数据的 plot 子目录,将其中 .txt 结尾的数据文件取出,依次放入 arg 参数中,将其作为一个参数 (logs) 传递给 gnuplot 脚本 (gux.plt) 去执行。另外它还有一个输入参数 ($1),也作为一个参数 (logpath) 传递给了 gnuplot 脚本。下面来看绘制代码 (gux.plt):

1 #! /usr/bin/gnuplot 2 set terminal png size 1080,720 3 set title logpath 4 set output "plot/gux.png" 5 set grid 6 7 set xdata time 8 set timefmt "%Y-%m-%d %H:%M:%S" 9 set format x "%m/%d" 10 set xtic rotate by 315 # 270 + 45 11 set xtics 86400*3 # three day a big tic 12 set mxtics 3 # every day a small tic 13 14 # plot in double Y axises 15 set xlabel "day(s)" 16 set ylabel "session(pid)" 17 18 #plot "log.txt" using 2:4 with points pt 1 title "score" 19 plot for [log in logs] "plot/".log.".txt" using 1:3 w points pt 5 title log 20 21 quit

简单解读一下:

- 进行一些基本设置 (line 2-6);

- 设置横坐标 (line 7-12),包括设置时间轴、时间格式、横坐标刻度等等;

- 设置坐标名称 (line 15,16);

- 最后一条语句完成所有绘制 (line 19)。

重点说明一下最后一条,正常的数据绘制是如 line 18 所示,有输入数据 (log.txt)、有使用的数据列 (using 2:4)、有展现形式 (with points pt 1)、有图例名称 (score)。它和 19 行的区别在于,后者是一条循环语句,它遍历 logs 参数中的所有值并将当前值存放在 log 变量中 (其实就是产品标识啦),后面它用这个变量拼接出了输入的数据来源 (例如 plot/GDraw.txt,注意 gnuplot 是使用 '.' 来连接字符串与变量的);最后还用这个变量命名了图例的名称 (title log);其它和 18 行没有什么区别。如果对上一个脚本 guxplot.sh 还有印象的话,它一共传递给这个脚本两个参数:logs 和 logpath,刚刚说明的是前者,后者是用来设置标题的,这样就能清楚得知数据来源。

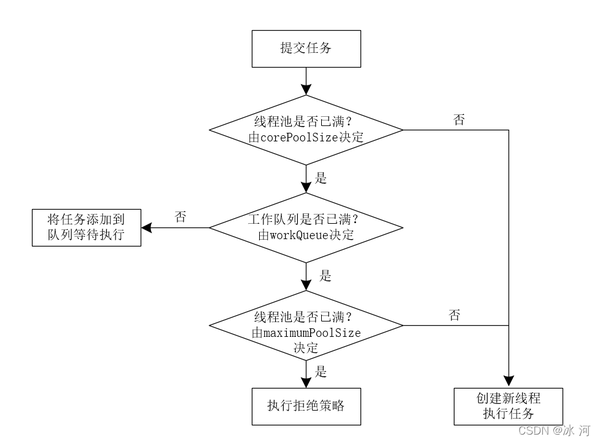

把一切串起来

关键脚本搞清楚之后,我们就可以做总成了,下面是驱动"一切"的那个脚本 (guxstrip.sh) :

1 #! /bin/bash 2 use_local=1 3 gdpdir=$(pwd) 4 logs=$(find . -type f -name "gux_log.*.txt") 5 if [ -z "$logs" ]; then 6 use_local=0 7 echo "no log found under current directory, try system..." 8 gdpdir="/cygdrive/c/ProgramData/Glodon/GUX/3.0" 9 iswinxp=$(Wmic OS Get Caption | grep "XP") 10 if [ ! -z "$iswinxp" ]; then 11 echo "winxp detected!" 12 gdpdir="/cygdrive/c/Documents and Settings/All Users/Application Data/Glodon/GUX/3.0" 13 fi 14 15 logs=$(find "$gdpdir" -type f -name "gux_log.*.txt") 16 if [ -z "$logs" ]; then 17 echo "no log found, nothing to do, quit" 18 exit; 19 fi 20 fi 21 22 n=1 23 sel=-1 24 echo "0: all" 25 for log in $logs 26 do 27 echo "$n: $log $(stat -c '%s' $log) bytes" 28 n=$(($n+1)) 29 done 30 31 until [ $sel -ge 0 ] && [ $sel -lt $n ] 32 do 33 echo -n "which log do you want to strip: (0) " 34 read sel 35 if [ -z "$sel" ]; then 36 sel=0 37 fi 38 done 39 40 rm -rf plot 41 mkdir plot 42 43 n=0 44 for log in $logs 45 do 46 if (( $sel == 0 )) || (( $n == $sel - 1 )); then 47 echo "handle log: $log" 48 echo "handle log: $log" >> "plot/plot.log" 49 sed -n '/add action .*\..*/p' "$log" > "plot/gux.data" 50 if [ ! -s "plot/gux.data" ]; then 51 echo "no valid data in that file, skip" 52 n=$(($n+1)) 53 continue; 54 fi 55 # 2.0 log format 56 # 57 # [1] 2019-09-19 00:39:24 10772 5992 add action GDQ2018.合并 (1) 58 # 59 # 3.0 log format v1 60 # 61 # 2020-06-11 13:38:34.666 17272 17712 [1] add action GCCP5.timer_duration : xxx 62 # 63 # 3.0 log format v2 64 # 65 # 2020-06-11 13:38:34.666 17272 17712 gmtsvc [1] add action GCCP5.timer_duration : xxx 66 # 67 # to adapt all of them 68 awk -F "[. ]+" '{ if ( $1 ~ /\[[0-9]]/ ) { print $2,$3,$4,$8,$9 >> "plot/"$8".txt"; a[$8]++ } else if ( $9 == "action" ) { print $1,$2,$4,$10,$11 >> "plot/"$10".txt"; a[$10]++ } else { print $1,$2,$4,$9,$10 >> "plot/"$9".txt"; a[$9]++ } } END { for (i in a) print i " : " a[i] }' "plot/gux.data" >> "plot/plot.log" 69 70 # prepare the remote file for later compress 71 if [ "$use_local" -eq 0 ]; then 72 cp "$log" "./plot/${log##*/}.log" 73 fi 74 fi 75 n=$(($n+1)) 76 done 77 78 logs=$(find plot -type f -name "*.txt") 79 if [ -z "$logs" ]; then 80 echo "no data stripped, exit plotting!" 81 exit 82 fi 83 84 echo -e "\nstart ploting" 85 bash.exe guxplot.sh "$gdpdir" # 2>>error.txt 86 87 # last pack them up! 88 echo -e "\nstart packing" 89 rm plot.tar.gz 90 rm "plot/gux.data" 91 92 # tar can NOT find gzip in our situation 93 # so use them separately 94 #tar cvzf plot.tar.gz plot 95 tar cvf plot.tar plot 96 gzip plot.tar 97 98 echo "work done!"

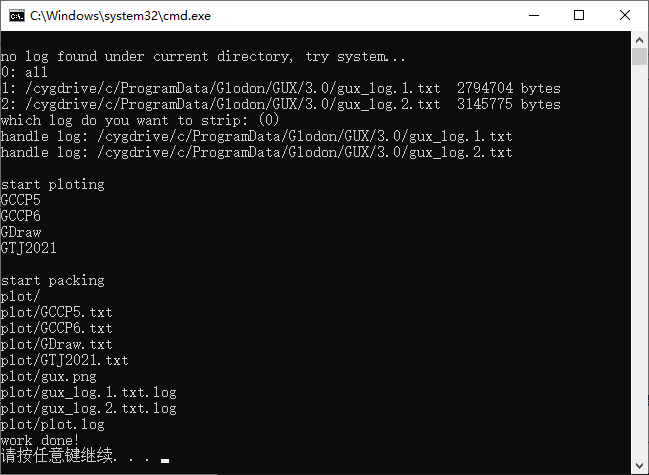

简单解读一下:

- 首先判断输入日志来源 (line 2-20),如果在系统目录下找不到日志,就在本目录查找,这样便于直接分析获取到的日志,而不是去用户机器上装一个工具。如果都找不到,就没得可分析,直接退出。这里系统的话由于WinXP 和 Win7+ 存放的目录不一样,所以要区分一下,注意这个脚本虽然是 shell 脚本,但是是运行在 Windows 系统上的,所以一些 windows 的工具和命令也是可以直接调用的 (Wmic);

- 接着将查找到符合条件的日志名称 一 一 列出,供用户选择,用户可以直接回车 (或输入 0) 表示处理所有日志输入,也可以输入一个序号,表示只处理序号对应的日志。当用户只关心最近的日志数据的话,可以使用后面这个功能来缩小处理的日志时间范围,使生成的活跃图更精确一些;

- 分别处理各个日志 (line 43-76),用到了我们前面介绍的 sed 与 awk,注意添加数据时使用的是追加 (>>),这样可以避免覆盖前面生成的数据。同时如果日志不在当前目录下面,会自动将日志也复制到打包目录,方便万一有问题时继续进行分析;

- 如果没有任何数据文件生成 (line 78-82),表示日志中没有有效的数据,没得分析,退出;

- 绘制活跃图 (line 84,85);

- 将所有的数据和图表压缩成一个 tar 包 (line 87-96),方便后续提交。这时里本来想用 'tar cvzf‘ 一步生成,结果 tar 老是报 gzip 找不到,虽然我已经提供了这个 exe,后来拆分成两步 (tar + gzip) 就好了,可能是 tar 有它自己的查找方式,在移植到 windows 环境后被破坏掉了。

下面是这个脚本的运行截图:

运行结束后会自动弹出埋点活跃图。

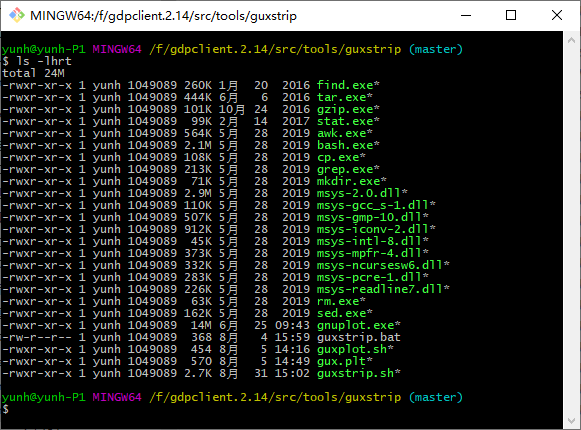

结语

其实前面稍微提到了一点,就是我是在 Windows 环境下运行 shell 脚本的,使用的是 msys2,一种类似 cygwin 的东东,我前几篇文章都有提到过。最终这个日志清洗工具做好后,我只需要把我用到的 msys2 命令提取出来,就可以在新的机器上运行了,既不用安装,也不用注册,完全是个"绿色"版本啊。下面一张工具内容的截图为证:

其中 exe 除了 bash.exe 外,均为脚本中用到的命令;dll 基本是各个 exe 依赖的文件,这个是通过试运行报错后一个一个从 msys2 系统扒过来的;bat、sh 与 plt 基本就是我们的脚本文件啦。整个工具占用空间 23.3 MB,压缩后仅仅 10 MB 多一点,非常便于给用户交付,我想这也是 msys2 系统相比于各种脚本语言最大的优势 —— 不需要安装庞大的语言虚拟机或运行时库,用到什么再添什么,比较小巧灵活,适合我这种简单的任务。

参考

[1]. gnuplot图例legend设置

[2]. gnuplot函数画图

[4]. command line - 如何将命令行参数传递给gnuplot?

[5]. gnuplot的常用技巧

[6]. (4) - 谈谈gnuplot(十四):第二坐标轴

[7]. Using gnuplot to display data in your Web pages

[8]. Chapter 5. Plotting

[10]. gnuplot 让您的数据可视化

[11]. awk模糊匹配统计行数命令的详解

[12]. AWK 简明教程

[13]. awk调用系统命令参数传递的问题

![[学习笔记]PostgreSQL数据库的安装和配置](https://img-blog.csdnimg.cn/f0ca21df3c96432e994935591a898880.png)

![[Java]线程生命周期与线程通信](https://img-blog.csdnimg.cn/direct/8e1a396ad6f2425a8bea15c5fe54c1f3.png)