评分卡案例

数据预处理

%matplotlib inline

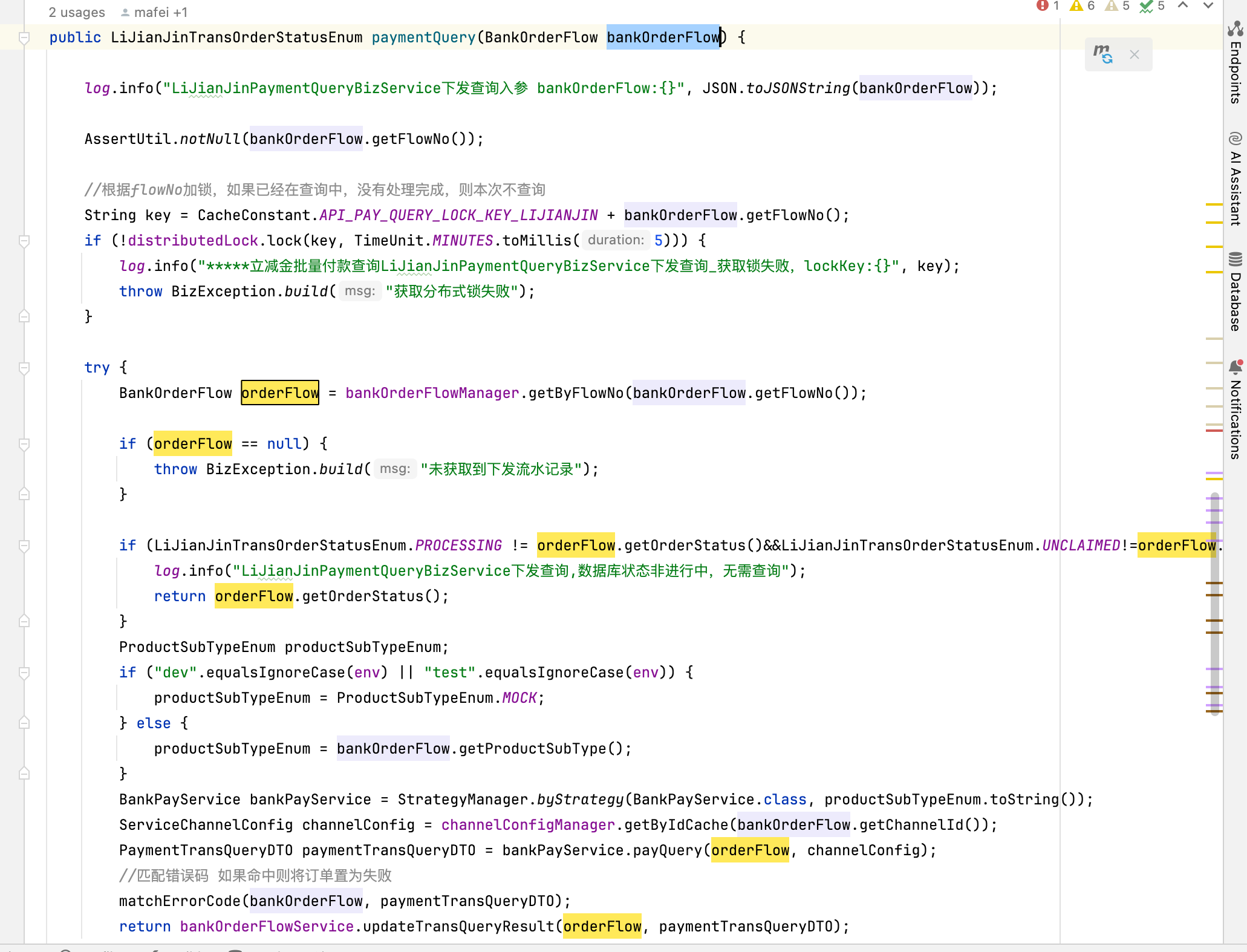

# 导入库

import pandas as pd

import numpy as np

from sklearn.linear_model import LogisticRegression as LR

# 加载数据

data = pd.read_csv("./rankingcard.csv", index_col=0)

data.head()

| SeriousDlqin2yrs | RevolvingUtilizationOfUnsecuredLines | age | NumberOfTime30-59DaysPastDueNotWorse | DebtRatio | MonthlyIncome | NumberOfOpenCreditLinesAndLoans | NumberOfTimes90DaysLate | NumberRealEstateLoansOrLines | NumberOfTime60-89DaysPastDueNotWorse | NumberOfDependents | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 1 | 0.766127 | 45 | 2 | 0.802982 | 9120.0 | 13 | 0 | 6 | 0 | 2.0 |

| 2 | 0 | 0.957151 | 40 | 0 | 0.121876 | 2600.0 | 4 | 0 | 0 | 0 | 1.0 |

| 3 | 0 | 0.658180 | 38 | 1 | 0.085113 | 3042.0 | 2 | 1 | 0 | 0 | 0.0 |

| 4 | 0 | 0.233810 | 30 | 0 | 0.036050 | 3300.0 | 5 | 0 | 0 | 0 | 0.0 |

| 5 | 0 | 0.907239 | 49 | 1 | 0.024926 | 63588.0 | 7 | 0 | 1 | 0 | 0.0 |

# 删除重复值

data.drop_duplicates(inplace=True)

# 查看缺失值占比

data.isnull().sum() / data.shape[0]

SeriousDlqin2yrs 0.000000

RevolvingUtilizationOfUnsecuredLines 0.000000

age 0.000000

NumberOfTime30-59DaysPastDueNotWorse 0.000000

DebtRatio 0.000000

MonthlyIncome 0.195601

NumberOfOpenCreditLinesAndLoans 0.000000

NumberOfTimes90DaysLate 0.000000

NumberRealEstateLoansOrLines 0.000000

NumberOfTime60-89DaysPastDueNotWorse 0.000000

NumberOfDependents 0.025624

dtype: float64

# 删除缺失值

data.drop(index=data[data["NumberOfDependents"].isna() == True].index, inplace=True)

# 重建索引

data.index = range(data.shape[0])

data

type(data)

pandas.core.frame.DataFrame

# 封装填充缺失值函数

def fill_miss_rfr(x, y, to_fill: str, n_estimators: int) -> np.ndarray:

"""

Args:

x (DataFram): 要填补的特征矩阵

y (Serise): 完整的,没有缺失值的标签

to_fill (str): 字符串,要填补的那一列的名称

n_estimators (int):随机森林树的数量

"""

# 构建新特征矩阵和新标签

df = x.copy()

fill = df.loc[:, to_fill]

df = pd.concat([df.loc[:, df.columns != to_fill], pd.DataFrame(y)], axis=1)

# 划分训练集和测试集

y_train = fill[fill.notna()]

y_test = fill[fill.isna()]

x_train = df.iloc[y_train.index, :]

x_test = df.iloc[y_test.index, :]

# 使用随机森林填充缺失值

from sklearn.ensemble import RandomForestRegressor as RFR

rfr = RFR(n_estimators=n_estimators)

rfr.fit(x_train, y_train)

y_predict = rfr.predict(x_test)

return y_predict

# 准备参数

x = data.iloc[:, 1:]

y = data.iloc[:, 1]

# 获取缺失值MonthlyIncome

y_predict = fill_miss_rfr(x, y, "MonthlyIncome", 100)

# 填补缺失值MonthlyIncome

data.loc[data.loc[:, "MonthlyIncome"].isna(), "MonthlyIncome"] = y_predict

描述性统计处理异常值

data.describe([0.25, 0.5, 0.75, 0.90]).T

| count | mean | std | min | 25% | 50% | 75% | 90% | max | |

|---|---|---|---|---|---|---|---|---|---|

| SeriousDlqin2yrs | 145563.0 | 0.067538 | 0.250952 | 0.0 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 1.0 |

| RevolvingUtilizationOfUnsecuredLines | 145563.0 | 5.941378 | 250.510835 | 0.0 | 0.031218 | 0.158817 | 0.561085 | 0.976557 | 50708.0 |

| age | 145563.0 | 52.110701 | 14.567652 | 0.0 | 41.000000 | 52.000000 | 62.000000 | 72.000000 | 107.0 |

| NumberOfTime30-59DaysPastDueNotWorse | 145563.0 | 0.389185 | 3.756944 | 0.0 | 0.000000 | 0.000000 | 0.000000 | 1.000000 | 98.0 |

| DebtRatio | 145563.0 | 334.548251 | 1947.228209 | 0.0 | 0.173934 | 0.359090 | 0.770641 | 1150.000000 | 329664.0 |

| MonthlyIncome | 145563.0 | 5552.682762 | 13384.682061 | 0.0 | 2000.000000 | 4500.000000 | 7500.000000 | 10841.800000 | 3008750.0 |

| NumberOfOpenCreditLinesAndLoans | 145563.0 | 8.553788 | 5.141132 | 0.0 | 5.000000 | 8.000000 | 11.000000 | 15.000000 | 58.0 |

| NumberOfTimes90DaysLate | 145563.0 | 0.231309 | 3.728803 | 0.0 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 98.0 |

| NumberRealEstateLoansOrLines | 145563.0 | 1.033346 | 1.133115 | 0.0 | 0.000000 | 1.000000 | 2.000000 | 2.000000 | 54.0 |

| NumberOfTime60-89DaysPastDueNotWorse | 145563.0 | 0.205622 | 3.712455 | 0.0 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 98.0 |

| NumberOfDependents | 145563.0 | 0.759863 | 1.116141 | 0.0 | 0.000000 | 0.000000 | 1.000000 | 2.000000 | 20.0 |

# 一个年龄为0的人,需要将他删掉

(data["age"] == 0).sum()

data = data[data["age"] != 0]

data = data[data.loc[:, "NumberOfTimes90DaysLate"] < 90]

# 重置索引

data.index = range(data.shape[0])

data.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 145354 entries, 0 to 145353

Data columns (total 11 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 SeriousDlqin2yrs 145354 non-null int64

1 RevolvingUtilizationOfUnsecuredLines 145354 non-null float64

2 age 145354 non-null int64

3 NumberOfTime30-59DaysPastDueNotWorse 145354 non-null int64

4 DebtRatio 145354 non-null float64

5 MonthlyIncome 145354 non-null float64

6 NumberOfOpenCreditLinesAndLoans 145354 non-null int64

7 NumberOfTimes90DaysLate 145354 non-null int64

8 NumberRealEstateLoansOrLines 145354 non-null int64

9 NumberOfTime60-89DaysPastDueNotWorse 145354 non-null int64

10 NumberOfDependents 145354 non-null float64

dtypes: float64(4), int64(7)

memory usage: 12.2 MB

查看样本均衡问题

x = data.iloc[:, 1:]

y = data.iloc[:, 0]

sample_n = y.value_counts().sum()

sample_0 = y.value_counts()[0]

sample_1 = y.value_counts()[1]

print(

"样本总数: {}, 样本0占比:{:.2%}, 样本1占比:{:.2%}".format(

sample_n, sample_0 / sample_n, sample_1 / sample_n

)

)

样本总数: 145354, 样本0占比:93.32%, 样本1占比:6.68%

# 增加样本1的占比

from imblearn.over_sampling import SMOTE

sm = SMOTE(random_state=42)

x, y = sm.fit_resample(x, y)

sample_n = y.value_counts().sum()

sample_0 = y.value_counts()[0]

sample_1 = y.value_counts()[1]

print(

"样本总数: {}, 样本0占比:{:.2%}, 样本1占比:{:.2%}".format(

sample_n, sample_0 / sample_n, sample_1 / sample_n

)

)

样本总数: 271296, 样本0占比:50.00%, 样本1占比:50.00%

data

| SeriousDlqin2yrs | RevolvingUtilizationOfUnsecuredLines | age | NumberOfTime30-59DaysPastDueNotWorse | DebtRatio | MonthlyIncome | NumberOfOpenCreditLinesAndLoans | NumberOfTimes90DaysLate | NumberRealEstateLoansOrLines | NumberOfTime60-89DaysPastDueNotWorse | NumberOfDependents | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 0.766127 | 45 | 2 | 0.802982 | 9120.0 | 13 | 0 | 6 | 0 | 2.0 |

| 1 | 0 | 0.957151 | 40 | 0 | 0.121876 | 2600.0 | 4 | 0 | 0 | 0 | 1.0 |

| 2 | 0 | 0.658180 | 38 | 1 | 0.085113 | 3042.0 | 2 | 1 | 0 | 0 | 0.0 |

| 3 | 0 | 0.233810 | 30 | 0 | 0.036050 | 3300.0 | 5 | 0 | 0 | 0 | 0.0 |

| 4 | 0 | 0.907239 | 49 | 1 | 0.024926 | 63588.0 | 7 | 0 | 1 | 0 | 0.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 145349 | 0 | 0.040674 | 74 | 0 | 0.225131 | 2100.0 | 4 | 0 | 1 | 0 | 0.0 |

| 145350 | 0 | 0.299745 | 44 | 0 | 0.716562 | 5584.0 | 4 | 0 | 1 | 0 | 2.0 |

| 145351 | 0 | 0.246044 | 58 | 0 | 3870.000000 | 0.0 | 18 | 0 | 1 | 0 | 0.0 |

| 145352 | 0 | 0.000000 | 30 | 0 | 0.000000 | 5716.0 | 4 | 0 | 0 | 0 | 0.0 |

| 145353 | 0 | 0.850283 | 64 | 0 | 0.249908 | 8158.0 | 8 | 0 | 2 | 0 | 0.0 |

145354 rows × 11 columns

划分测试集和训练集并为分箱做准备

from sklearn.model_selection import train_test_split as tts

x_train, x_vali, y_train, y_vali = tts(x, y, test_size=0.3, random_state=420)

model_data = pd.concat([y_train, x_train], axis=1)

model_data.index = range(model_data.shape[0])

model_data.columns = data.columns

model_vali = pd.concat([y_vali, x_vali], axis=1)

model_vali.index = range(model_vali.shape[0])

model_vali.columns = data.columns

model_data.to_csv("./model_data.csv")

model_vali.to_csv("./model_vali.csv")

分箱

等频分箱

# 按照等频对箱子进行分箱

model_data["qcut"], updown = pd.qcut(model_data["age"], retbins=True, q=20)

coount_0 = (

model_data[model_data["SeriousDlqin2yrs"] == 0]

.groupby(by=model_data["qcut"])

.count()["SeriousDlqin2yrs"]

)

coount_1 = (

model_data[model_data["SeriousDlqin2yrs"] == 1]

.groupby(by=model_data["qcut"])

.count()["SeriousDlqin2yrs"]

)

num_bins = [*zip(updown, updown[1:], coount_0, coount_1)]

num_bins

[(21.0, 28.0, 4121, 7178),

(28.0, 31.0, 3476, 5783),

(31.0, 34.0, 3933, 6662),

(34.0, 36.0, 2896, 4652),

(36.0, 39.0, 5108, 7417),

(39.0, 41.0, 3963, 5843),

(41.0, 43.0, 3957, 5558),

(43.0, 45.0, 4317, 5691),

(45.0, 46.0, 2342, 3240),

(46.0, 48.0, 4864, 6150),

(48.0, 50.0, 4861, 6038),

(50.0, 52.0, 4621, 5523),

(52.0, 54.0, 4560, 4623),

(54.0, 56.0, 4498, 4010),

(56.0, 58.0, 4468, 3359),

(58.0, 61.0, 6451, 4746),

(61.0, 63.0, 4712, 2228),

(63.0, 67.0, 6974, 2578),

(67.0, 73.0, 6975, 2041),

(73.0, 107.0, 7998, 1492)]

定义 WOE 和 IV 函数

# 获取WOE

columns = ["min", "max", "count_0", "count_1"]

df = pd.DataFrame(num_bins, columns=columns)

df["tatol"] = df.count_0 + df.count_1

df["percentage"] = df.tatol / df.tatol.sum()

df["bad_rate"] = df.count_1 / df.tatol

df["good%"] = df.count_0 / df.count_0.sum()

df["bad%"] = df.count_1 / df.count_1.sum()

df["woe"] = np.log(df["good%"] / df["bad%"])

# 获取IV

rate = df["good%"] - df["bad%"]

iv = np.sum(rate * df["woe"])

# 封装 WOE 和 IV 函数

def get_woe(data: list) -> pd.DataFrame:

"""通过 num_bins 数据计算 woe

Args:

data (list): _description_

Returns:

pd.DataFrame: _description_

"""

df = data.copy()

columns = ["min", "max", "count_0", "count_1"]

df = pd.DataFrame(df, columns=columns)

df["tatol"] = df.count_0 + df.count_1

df["percentage"] = df.tatol / df.tatol.sum()

df["bad_rate"] = df.count_1 / df.tatol

df["good%"] = df.count_0 / df.count_0.sum()

df["bad%"] = df.count_1 / df.count_1.sum()

df["woe"] = np.log(df["good%"] / df["bad%"])

return df

def get_iv(data: pd.DataFrame) -> float:

"""计算IV值

Args:

data (pd.DataFrame): _description_

Returns:

float: _description_

"""

rate = data["good%"] - data["bad%"]

iv = np.sum(rate * data["woe"])

return iv

get_iv(get_woe(num_bins))

0.3451906890667462

num_bins_ = num_bins.copy()

[*zip(range(len(num_bins_)), num_bins_)]

[(0, (21.0, 28.0, 4121, 7178)),

(1, (28.0, 31.0, 3476, 5783)),

(2, (31.0, 34.0, 3933, 6662)),

(3, (34.0, 36.0, 2896, 4652)),

(4, (36.0, 39.0, 5108, 7417)),

(5, (39.0, 41.0, 3963, 5843)),

(6, (41.0, 43.0, 3957, 5558)),

(7, (43.0, 45.0, 4317, 5691)),

(8, (45.0, 46.0, 2342, 3240)),

(9, (46.0, 48.0, 4864, 6150)),

(10, (48.0, 50.0, 4861, 6038)),

(11, (50.0, 52.0, 4621, 5523)),

(12, (52.0, 54.0, 4560, 4623)),

(13, (54.0, 56.0, 4498, 4010)),

(14, (56.0, 58.0, 4468, 3359)),

(15, (58.0, 61.0, 6451, 4746)),

(16, (61.0, 63.0, 4712, 2228)),

(17, (63.0, 67.0, 6974, 2578)),

(18, (67.0, 73.0, 6975, 2041)),

(19, (73.0, 107.0, 7998, 1492))]

卡方检验,合并箱体,画出 IV 曲线

# 卡方检验

import scipy.stats as ss

x1 = num_bins_[0][2:]

x2 = num_bins_[1][2:]

pvs = ss.chi2_contingency([x1, x2])[1]

pvs_list = []

for i in range(len(num_bins_) - 1):

x1 = num_bins_[i][2:]

x2 = num_bins_[i + 1][2:]

pvs = ss.chi2_contingency([x1, x2])[1]

pvs_list.append(pvs)

pvs_list

pvs_list

[0.11728724900429034,

0.5508163222314948,

0.09053121716062464,

0.0007530919909110494,

0.5874212014912026,

0.10044784335748964,

0.029701216027069655,

0.15850408664347226,

0.007103486255692831,

0.5224541867703053,

0.16902174569691014,

1.2731738769229555e-08,

2.1014725165904846e-05,

6.866926043454617e-08,

0.476802189877255,

1.944497287524474e-43,

1.0901328872969334e-12,

8.002706776208699e-12,

6.800451443744261e-33]

# 合并卡方检验相似的两个箱子

i = pvs_list.index(max(pvs_list))

num_bins_[i : i + 2] = [

(

num_bins_[i][0],

num_bins_[i + 2][0],

num_bins_[i][2] + num_bins_[i + 1][2],

num_bins_[i][3] + num_bins_[i + 1][3],

)

]

import matplotlib.pyplot as plt

IV = []

axisx = []

while len(num_bins_) > 2:

pvs = []

# 获取 num_bins_两两之间的卡方检验的置信度(或卡方值)

for i in range(len(num_bins_) - 1):

x1 = num_bins_[i][2:]

x2 = num_bins_[i + 1][2:]

# 0 返回 chi2 值,1 返回 p 值。

pv = ss.chi2_contingency([x1, x2])[1]

# chi2 = scipy.stats.chi2_contingency([x1,x2])[0]

pvs.append(pv)

# 通过 p 值进行处理。合并 p 值最大的两组

i = pvs.index(max(pvs))

num_bins_[i : i + 2] = [

(

num_bins_[i][0],

num_bins_[i + 1][1],

num_bins_[i][2] + num_bins_[i + 1][2],

num_bins_[i][3] + num_bins_[i + 1][3],

)

]

bins_df = get_woe(num_bins_)

axisx.append(len(num_bins_))

IV.append(get_iv(bins_df))

plt.figure()

plt.plot(axisx, IV)

plt.xticks(axisx)

plt.xlabel("number of box")

plt.ylabel("IV")

plt.show()

# 封装分箱函数

def get_bin(num_bins_: list, n: str) -> list:

"""分箱函数

Args:

num_bins (list): _description_

n (str): _description_

Returns:

list: _description_

"""

while len(num_bins_) > n:

pvs = []

# 获取 num_bins_两两之间的卡方检验的置信度(或卡方值)

for i in range(len(num_bins_) - 1):

x1 = num_bins_[i][2:]

x2 = num_bins_[i + 1][2:]

# 0 返回 chi2 值,1 返回 p 值。

pv = ss.chi2_contingency([x1, x2])[1]

# chi2 = scipy.stats.chi2_contingency([x1,x2])[0]

pvs.append(pv)

# 通过 p 值进行处理。合并 p 值最大的两组

i = pvs.index(max(pvs))

num_bins_[i : i + 2] = [

(

num_bins_[i][0],

num_bins_[i + 1][1],

num_bins_[i][2] + num_bins_[i + 1][2],

num_bins_[i][3] + num_bins_[i + 1][3],

)

]

return num_bins_

get_woe(get_bin(num_bins, 5))

| min | max | count_0 | count_1 | tatol | percentage | bad_rate | good% | bad% | woe | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 21.0 | 36.0 | 14426 | 24275 | 38701 | 0.203789 | 0.627245 | 0.151701 | 0.256033 | -0.523395 |

| 1 | 36.0 | 52.0 | 34033 | 45460 | 79493 | 0.418589 | 0.571874 | 0.357884 | 0.479475 | -0.292483 |

| 2 | 52.0 | 61.0 | 19977 | 16738 | 36715 | 0.193331 | 0.455890 | 0.210074 | 0.176539 | 0.173920 |

| 3 | 61.0 | 73.0 | 18661 | 6847 | 25508 | 0.134318 | 0.268426 | 0.196235 | 0.072217 | 0.999645 |

| 4 | 73.0 | 107.0 | 7998 | 1492 | 9490 | 0.049972 | 0.157218 | 0.084105 | 0.015736 | 1.676094 |

import scipy

def graphforbestbin(DF, X, Y, n=5, q=20, graph=True):

"""

自动最优分箱函数,基于卡方检验的分箱

参数:

DF: 需要输入的数据

X: 需要分箱的列名

Y: 分箱数据对应的标签 Y 列名

n: 保留分箱个数

q: 初始分箱的个数

graph: 是否要画出IV图像

区间为前开后闭 (]

"""

global bins_df

DF = DF[[X, Y]].copy()

DF["qcut"], bins = pd.qcut(DF[X], retbins=True, q=q, duplicates="drop")

coount_y0 = DF.loc[DF[Y] == 0].groupby(by="qcut").count()[Y]

coount_y1 = DF.loc[DF[Y] == 1].groupby(by="qcut").count()[Y]

num_bins = [*zip(bins, bins[1:], coount_y0, coount_y1)]

for i in range(q):

if 0 in num_bins[0][2:]:

num_bins[0:2] = [

(

num_bins[0][0],

num_bins[1][1],

num_bins[0][2] + num_bins[1][2],

num_bins[0][3] + num_bins[1][3],

)

]

continue

for i in range(len(num_bins)):

if 0 in num_bins[i][2:]:

num_bins[i - 1 : i + 1] = [

(

num_bins[i - 1][0],

num_bins[i][1],

num_bins[i - 1][2] + num_bins[i][2],

num_bins[i - 1][3] + num_bins[i][3],

)

]

break

else:

break

def get_woe(num_bins):

columns = ["min", "max", "count_0", "count_1"]

df = pd.DataFrame(num_bins, columns=columns)

df["total"] = df.count_0 + df.count_1

df["percentage"] = df.total / df.total.sum()

df["bad_rate"] = df.count_1 / df.total

df["good%"] = df.count_0 / df.count_0.sum()

df["bad%"] = df.count_1 / df.count_1.sum()

df["woe"] = np.log(df["good%"] / df["bad%"])

return df

def get_iv(df):

rate = df["good%"] - df["bad%"]

iv = np.sum(rate * df.woe)

return iv

IV = []

axisx = []

while len(num_bins) > n:

pvs = []

for i in range(len(num_bins) - 1):

x1 = num_bins[i][2:]

x2 = num_bins[i + 1][2:]

pv = scipy.stats.chi2_contingency([x1, x2])[1]

pvs.append(pv)

i = pvs.index(max(pvs))

num_bins[i : i + 2] = [

(

num_bins[i][0],

num_bins[i + 1][1],

num_bins[i][2] + num_bins[i + 1][2],

num_bins[i][3] + num_bins[i + 1][3],

)

]

bins_df = pd.DataFrame(get_woe(num_bins))

axisx.append(len(num_bins))

IV.append(get_iv(bins_df))

if graph:

plt.figure()

plt.plot(axisx, IV)

plt.xticks(axisx)

plt.xlabel("number of box")

plt.ylabel("IV")

plt.show()

return bins_df

dfs = []

for i in model_data.columns[1:-1]:

print(i)

a = graphforbestbin(DF=model_data, X=i, Y="SeriousDlqin2yrs", graph=False)

dfs.append(a)

RevolvingUtilizationOfUnsecuredLines

age

NumberOfTime30-59DaysPastDueNotWorse

DebtRatio

MonthlyIncome

NumberOfOpenCreditLinesAndLoans

NumberOfTimes90DaysLate

NumberRealEstateLoansOrLines

NumberOfTime60-89DaysPastDueNotWorse

NumberOfDependents

for i in dfs:

print(i)

min max count_0 count_1 total percentage bad_rate \

0 0.000000 0.102453 42746 4731 47477 0.250001 0.099648

1 0.102453 0.224921 13207 5784 18991 0.100002 0.304565

2 0.224921 0.553524 17987 19994 37981 0.199998 0.526421

3 0.553524 1.000000 19729 53108 72837 0.383540 0.729135

4 1.000000 29110.000000 1426 11195 12621 0.066459 0.887014

good% bad% woe

0 0.449508 0.049899 2.198159

1 0.138882 0.061005 0.822671

2 0.189148 0.210880 -0.108763

3 0.207466 0.560140 -0.993218

4 0.014996 0.118076 -2.063574

min max count_0 count_1 total percentage bad_rate good% \

0 21.0 36.0 14426 24275 38701 0.203789 0.627245 0.151701

1 36.0 52.0 34033 45460 79493 0.418589 0.571874 0.357884

2 52.0 61.0 19977 16738 36715 0.193331 0.455890 0.210074

3 61.0 73.0 18661 6847 25508 0.134318 0.268426 0.196235

4 73.0 107.0 7998 1492 9490 0.049972 0.157218 0.084105

bad% woe

0 0.256033 -0.523395

1 0.479475 -0.292483

2 0.176539 0.173920

3 0.072217 0.999645

4 0.015736 1.676094

min max count_0 count_1 total percentage bad_rate good% \

0 21.0 36.0 14426 24275 38701 0.203789 0.627245 0.151701

1 36.0 52.0 34033 45460 79493 0.418589 0.571874 0.357884

2 52.0 61.0 19977 16738 36715 0.193331 0.455890 0.210074

3 61.0 73.0 18661 6847 25508 0.134318 0.268426 0.196235

4 73.0 107.0 7998 1492 9490 0.049972 0.157218 0.084105

bad% woe

0 0.256033 -0.523395

1 0.479475 -0.292483

2 0.176539 0.173920

3 0.072217 0.999645

4 0.015736 1.676094

min max count_0 count_1 total percentage bad_rate \

0 0.000000 0.017546 7816 1680 9496 0.050003 0.176917

1 0.017546 0.490044 52595 51853 104448 0.549996 0.496448

2 0.490044 1.120358 14828 23153 37981 0.199998 0.609594

3 1.120358 837.000000 8988 10006 18994 0.100017 0.526798

4 837.000000 329664.000000 10868 8120 18988 0.099986 0.427639

good% bad% woe

0 0.082191 0.017719 1.534399

1 0.553079 0.546903 0.011228

2 0.155928 0.244199 -0.448587

3 0.094516 0.105535 -0.110275

4 0.114286 0.085643 0.288512

min max count_0 count_1 total percentage bad_rate \

0 0.000000 9.000000e-02 6585 3001 9586 0.050477 0.313061

1 0.090000 1.419000e+03 13754 14643 28397 0.149531 0.515653

2 1419.000000 4.667000e+03 27842 38638 66480 0.350066 0.581197

3 4667.000000 6.238526e+03 13996 14476 28472 0.149926 0.508429

4 6238.526439 3.008750e+06 32918 24054 56972 0.299999 0.422207

good% bad% woe

0 0.069247 0.031652 0.782868

1 0.144634 0.154442 -0.065613

2 0.292781 0.407522 -0.330671

3 0.147179 0.152681 -0.036701

4 0.346159 0.253702 0.310738

min max count_0 count_1 total percentage bad_rate good% \

0 0.0 1.0 3104 7758 10862 0.057196 0.714233 0.032641

1 1.0 3.0 9412 13478 22890 0.120533 0.588816 0.098975

2 3.0 5.0 15485 16514 31999 0.168498 0.516079 0.162837

3 5.0 17.0 61589 53679 115268 0.606971 0.465689 0.647658

4 17.0 58.0 5505 3383 8888 0.046802 0.380626 0.057889

bad% woe

0 0.081825 -0.919013

1 0.142155 -0.362054

2 0.174176 -0.067317

3 0.566163 0.134481

4 0.035681 0.483913

min max count_0 count_1 total percentage bad_rate good% \

0 0.0 1.0 3104 7758 10862 0.057196 0.714233 0.032641

1 1.0 3.0 9412 13478 22890 0.120533 0.588816 0.098975

2 3.0 5.0 15485 16514 31999 0.168498 0.516079 0.162837

3 5.0 17.0 61589 53679 115268 0.606971 0.465689 0.647658

4 17.0 58.0 5505 3383 8888 0.046802 0.380626 0.057889

bad% woe

0 0.081825 -0.919013

1 0.142155 -0.362054

2 0.174176 -0.067317

3 0.566163 0.134481

4 0.035681 0.483913

min max count_0 count_1 total percentage bad_rate good% \

0 0.0 1.0 3104 7758 10862 0.057196 0.714233 0.032641

1 1.0 3.0 9412 13478 22890 0.120533 0.588816 0.098975

2 3.0 5.0 15485 16514 31999 0.168498 0.516079 0.162837

3 5.0 17.0 61589 53679 115268 0.606971 0.465689 0.647658

4 17.0 58.0 5505 3383 8888 0.046802 0.380626 0.057889

bad% woe

0 0.081825 -0.919013

1 0.142155 -0.362054

2 0.174176 -0.067317

3 0.566163 0.134481

4 0.035681 0.483913

min max count_0 count_1 total percentage bad_rate good% \

0 0.0 1.0 3104 7758 10862 0.057196 0.714233 0.032641

1 1.0 3.0 9412 13478 22890 0.120533 0.588816 0.098975

2 3.0 5.0 15485 16514 31999 0.168498 0.516079 0.162837

3 5.0 17.0 61589 53679 115268 0.606971 0.465689 0.647658

4 17.0 58.0 5505 3383 8888 0.046802 0.380626 0.057889

bad% woe

0 0.081825 -0.919013

1 0.142155 -0.362054

2 0.174176 -0.067317

3 0.566163 0.134481

4 0.035681 0.483913

min max count_0 count_1 total percentage bad_rate good% \

0 0.0 1.0 3104 7758 10862 0.057196 0.714233 0.032641

1 1.0 3.0 9412 13478 22890 0.120533 0.588816 0.098975

2 3.0 5.0 15485 16514 31999 0.168498 0.516079 0.162837

3 5.0 17.0 61589 53679 115268 0.606971 0.465689 0.647658

4 17.0 58.0 5505 3383 8888 0.046802 0.380626 0.057889

bad% woe

0 0.081825 -0.919013

1 0.142155 -0.362054

2 0.174176 -0.067317

3 0.566163 0.134481

4 0.035681 0.483913

auto_col_bins = {

"RevolvingUtilizationOfUnsecuredLines": 6,

"age": 5,

"DebtRatio": 4,

"MonthlyIncome": 3,

"NumberOfOpenCreditLinesAndLoans": 5,

}

# 不能使用自动分箱的变量

hand_bins = {

"NumberOfTime30-59DaysPastDueNotWorse": [0, 1, 2, 13],

"NumberOfTimes90DaysLate": [0, 1, 2, 17],

"NumberRealEstateLoansOrLines": [0, 1, 2, 4, 54],

"NumberOfTime60-89DaysPastDueNotWorse": [0, 1, 2, 8],

"NumberOfDependents": [0, 1, 2, 3],

}

# 保证区间覆盖使用 np.inf替换最大值,用-np.inf替换最小值

hand_bins = {k: [-np.inf, *v[:-1], np.inf] for k, v in hand_bins.items()}

bins_of_col = {}

# 生成自动分箱的分箱区间和分箱后的 IV 值

for col in auto_col_bins:

bins_df = graphforbestbin(

model_data,

col,

"SeriousDlqin2yrs",

n=auto_col_bins[col]

# 使用字典的性质来取出每个特征所对应的箱的数量

,

q=20,

graph=False,

)

bins_list = sorted(set(bins_df["min"]).union(bins_df["max"]))

# 保证区间覆盖使用 np.inf 替换最大值 -np.inf 替换最小值

bins_list[0], bins_list[-1] = -np.inf, np.inf

bins_of_col[col] = bins_list

# 合并手动分箱数据

bins_of_col.update(hand_bins)

bins_of_col

{'RevolvingUtilizationOfUnsecuredLines': [-inf,

0.1024533425,

0.2249209732,

0.5535236390041033,

0.9829105421203392,

0.9999999,

inf],

'age': [-inf, 36.0, 52.0, 61.0, 73.0, inf],

'DebtRatio': [-inf, 0.017546052905140292, 0.4900438860450557, 837.0, inf],

'MonthlyIncome': [-inf, 0.09, 6238.526438865782, inf],

'NumberOfOpenCreditLinesAndLoans': [-inf, 1.0, 3.0, 5.0, 17.0, inf],

'NumberOfTime30-59DaysPastDueNotWorse': [-inf, 0, 1, 2, inf],

'NumberOfTimes90DaysLate': [-inf, 0, 1, 2, inf],

'NumberRealEstateLoansOrLines': [-inf, 0, 1, 2, 4, inf],

'NumberOfTime60-89DaysPastDueNotWorse': [-inf, 0, 1, 2, inf],

'NumberOfDependents': [-inf, 0, 1, 2, inf]}

data = model_data.copy()

data["cut"] = pd.cut(data["age"], bins_of_col["age"])

data.groupby("cut")["SeriousDlqin2yrs"].value_counts().unstack()

bins_df = data.groupby("cut")["SeriousDlqin2yrs"].value_counts().unstack()

bins_df

| SeriousDlqin2yrs | 0 | 1 |

|---|---|---|

| cut | ||

| (-inf, 36.0] | 14426 | 24275 |

| (36.0, 52.0] | 34033 | 45460 |

| (52.0, 61.0] | 19977 | 16738 |

| (61.0, 73.0] | 18661 | 6847 |

| (73.0, inf] | 7998 | 1492 |

bins_df[0]

cut

(-inf, 36.0] 14426

(36.0, 52.0] 34033

(52.0, 61.0] 19977

(61.0, 73.0] 18661

(73.0, inf] 7998

Name: 0, dtype: int64

bins_df["woe"] = np.log(

(bins_df[0] / bins_df[0].sum()) / (bins_df[1] / bins_df[1].sum())

)

bins_df

| SeriousDlqin2yrs | 0 | 1 | woe |

|---|---|---|---|

| cut | |||

| (-inf, 36.0] | 14426 | 24275 | -0.523395 |

| (36.0, 52.0] | 34033 | 45460 | -0.292483 |

| (52.0, 61.0] | 19977 | 16738 | 0.173920 |

| (61.0, 73.0] | 18661 | 6847 | 0.999645 |

| (73.0, inf] | 7998 | 1492 | 1.676094 |

def get_woe(df, col, y, bins):

df = df[[col, y]].copy()

df["cut"] = pd.cut(df[col], bins)

bins_df = df.groupby("cut")[y].value_counts().unstack()

woe = bins_df["woe"] = np.log(

(bins_df[0] / bins_df[0].sum()) / (bins_df[1] / bins_df[1].sum())

)

return woe

# 将所有特征的WOE存储到字典当中

woeall = {}

for col in bins_of_col:

woeall[col] = get_woe(model_data, col, "SeriousDlqin2yrs", bins_of_col[col])

woeall

{'RevolvingUtilizationOfUnsecuredLines': cut

(-inf, 0.102] 2.198159

(0.102, 0.225] 0.822671

(0.225, 0.554] -0.108763

(0.554, 0.983] -1.156168

(0.983, 1.0] -0.483896

(1.0, inf] -2.063574

dtype: float64,

'age': cut

(-inf, 36.0] -0.523395

(36.0, 52.0] -0.292483

(52.0, 61.0] 0.173920

(61.0, 73.0] 0.999645

(73.0, inf] 1.676094

dtype: float64,

'DebtRatio': cut

(-inf, 0.0175] 1.534399

(0.0175, 0.49] 0.011228

(0.49, 837.0] -0.333937

(837.0, inf] 0.288512

dtype: float64,

'MonthlyIncome': cut

(-inf, 0.09] 0.782868

(0.09, 6238.526] -0.200869

(6238.526, inf] 0.310738

dtype: float64,

'NumberOfOpenCreditLinesAndLoans': cut

(-inf, 1.0] -0.919013

(1.0, 3.0] -0.362054

(3.0, 5.0] -0.067317

(5.0, 17.0] 0.134481

(17.0, inf] 0.483913

dtype: float64,

'NumberOfTime30-59DaysPastDueNotWorse': cut

(-inf, 0.0] 0.351043

(0.0, 1.0] -0.868353

(1.0, 2.0] -1.363561

(2.0, inf] -1.482353

dtype: float64,

'NumberOfTimes90DaysLate': cut

(-inf, 0.0] 0.235054

(0.0, 1.0] -1.737703

(1.0, 2.0] -2.281241

(2.0, inf] -2.377136

dtype: float64,

'NumberRealEstateLoansOrLines': cut

(-inf, 0.0] -0.408144

(0.0, 1.0] 0.195483

(1.0, 2.0] 0.650412

(2.0, 4.0] 0.372983

(4.0, inf] -0.297756

dtype: float64,

'NumberOfTime60-89DaysPastDueNotWorse': cut

(-inf, 0.0] 0.124264

(0.0, 1.0] -1.375012

(1.0, 2.0] -1.807985

(2.0, inf] -1.792286

dtype: float64,

'NumberOfDependents': cut

(-inf, 0.0] 0.636441

(0.0, 1.0] -0.586082

(1.0, 2.0] -0.510146

(2.0, inf] -0.451625

dtype: float64}

建模与模型验证

# 不希望覆盖掉原本的数据,创建一个新的DataFrame,索引和原始数据model_data一模一样

model_woe = pd.DataFrame(index=model_data.index)

# 将原数据分箱后,按箱的结果把WOE结构用map函数映射到数据中

model_woe["age"] = pd.cut(model_data["age"], bins_of_col["age"]).map(woeall["age"])

model_woe

| age | |

|---|---|

| 0 | 0.173920 |

| 1 | 0.999645 |

| 2 | -0.292483 |

| 3 | 0.173920 |

| 4 | -0.292483 |

| ... | ... |

| 189902 | 0.173920 |

| 189903 | -0.292483 |

| 189904 | -0.523395 |

| 189905 | 0.999645 |

| 189906 | -0.523395 |

189907 rows × 1 columns

# 对所有特征操作可以写成:

for col in bins_of_col:

model_woe[col] = pd.cut(model_data[col], bins_of_col[col]).map(woeall[col])

# 将标签补充到数据中

model_woe["SeriousDlqin2yrs"] = model_data["SeriousDlqin2yrs"]

vali_woe = pd.DataFrame(index=model_data.index)

for col in bins_of_col:

vali_woe[col] = pd.cut(model_data[col], bins_of_col[col]).map(woeall[col])

vali_woe["SeriousDlqin2yrs"] = model_data["SeriousDlqin2yrs"]

vali_X = vali_woe.iloc[:, :-1]

vali_y = vali_woe.iloc[:, -1]

x = model_woe.iloc[:, :-1]

y = model_woe.iloc[:, -1]

x.columns

Index(['age', 'RevolvingUtilizationOfUnsecuredLines', 'DebtRatio',

'MonthlyIncome', 'NumberOfOpenCreditLinesAndLoans',

'NumberOfTime30-59DaysPastDueNotWorse', 'NumberOfTimes90DaysLate',

'NumberRealEstateLoansOrLines', 'NumberOfTime60-89DaysPastDueNotWorse',

'NumberOfDependents'],

dtype='object')

age = vali_X.pop("age")

age

/Users/chenhao/anaconda3/envs/machine_learn/lib/python3.10/site-packages/IPython/lib/pretty.py:778: FutureWarning: Index.ravel returning ndarray is deprecated; in a future version this will return a view on self.

output = repr(obj)

0 0.173920

1 0.999645

2 -0.292483

3 0.173920

4 -0.292483

...

189902 0.173920

189903 -0.292483

189904 -0.523395

189905 0.999645

189906 -0.523395

Name: age, Length: 189907, dtype: category

Categories (5, float64): [-0.523395 < -0.292483 < 0.173920 < 0.999645 < 1.676094]

vali_X.insert(0, column="age", value=age)

vali_X.columns

Index(['age', 'RevolvingUtilizationOfUnsecuredLines', 'DebtRatio',

'MonthlyIncome', 'NumberOfOpenCreditLinesAndLoans',

'NumberOfTime30-59DaysPastDueNotWorse', 'NumberOfTimes90DaysLate',

'NumberRealEstateLoansOrLines', 'NumberOfTime60-89DaysPastDueNotWorse',

'NumberOfDependents'],

dtype='object')

from sklearn.linear_model import LogisticRegression as LR

lr = LR().fit(x, y)

lr.score(vali_X, vali_y)

0.7838626274966167

from sklearn.model_selection import cross_val_score

c_1 = np.linspace(0.01, 1, 20)

c_2 = np.linspace(0.01, 0.2, 20)

score_list_1 = []

score_list_2 = []

for i in range(20):

lr1 = LR(penalty="l2", solver="liblinear", C=c_1[i])

lr1.fit(x, y)

score_list_1.append(cross_val_score(lr1, vali_X, vali_y, cv=10).mean())

lr2 = LR(penalty="l2", solver="liblinear", C=c_2[i])

lr2.fit(x, y)

score_list_2.append(cross_val_score(lr2, vali_X, vali_y, cv=10).mean())

plt.figure(figsize=[20, 5])

plt.plot(range(20), score_list_1, label="c_1")

plt.plot(range(20), score_list_2, label="c_2")

plt.legend()

plt.show()

import scikitplot as skplt

vali_proba_df = pd.DataFrame(lr.predict_proba(vali_X))

skplt.metrics.plot_roc(

vali_y, vali_proba_df, plot_micro=False, figsize=(6, 6), plot_macro=False

)

<AxesSubplot: title={'center': 'ROC Curves'}, xlabel='False Positive Rate', ylabel='True Positive Rate'>

制作评分卡

B = 20 / np.log(2)

A = 600 + B * np.log(1 / 60)

B, A

(28.85390081777927, 481.8621880878296)

base_score = A - B * lr.intercept_

base_score

score_age = woeall["age"] * (-B * lr.coef_[0][0])

score_age

cut

(-inf, 36.0] -4.180160

(36.0, 52.0] -2.335948

(52.0, 61.0] 1.389030

(61.0, 73.0] 7.983784

(73.0, inf] 13.386325

dtype: float64

file = "./ScoreData.csv"

# 首先写入基准分数

# 之后使用循环,每次生成一组score_age类似的分档和分数,不断写入文件之中

with open(file, "w") as fdata:

fdata.write("base_score,{}\n".format(base_score))

for i, col in enumerate(x.columns):

score = woeall[col] * (-B * lr.coef_[0][i])

score.name = "Score"

score.index.name = col

score.to_csv(file, header=True, mode="a")

![[译]深入了解现代web浏览器(三)](https://img2022.cnblogs.com/blog/841228/202207/841228-20220708181749217-2023625180.avif)