In general, an autoencoder (AE) has the same input and output dimensions, but the two do not have to be equal.

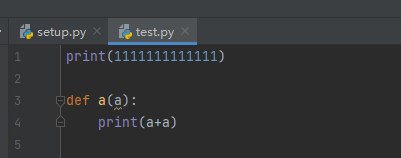

Here is a basic implementation:

    import torch
    import torch.nn as nn

    # Assume the input dimension is 784, the output dimension is 20,
    # and the bottleneck (latent) dimension is 3.
    in_size = 784
    out_size = 20

    # Hyperparameters
    EPOCH = 500
    LR = 0.005  # learning rate

    # Placeholder data so the script runs end to end (an assumption, not part of
    # the original post); replace with real tensors of shape (N, in_size) and
    # (N, out_size).
    AE_input = torch.rand(64, in_size)
    AE_output = torch.rand(64, out_size)


    class AutoEncoder(nn.Module):
        def __init__(self):
            super().__init__()
            self.encoder = nn.Sequential(
                nn.Linear(in_size, 256),
                nn.ReLU(),
                nn.Linear(256, 128),
                nn.ReLU(),
                nn.Linear(128, 64),
                nn.ReLU(),
                nn.Linear(64, 3),  # compress to 3 features, which can be visualized with plt
            )
            self.decoder = nn.Sequential(
                nn.Linear(3, 128),
                nn.ReLU(),
                nn.Linear(128, out_size),
            )

        def forward(self, x):
            encoded = self.encoder(x)
            decoded = self.decoder(encoded)
            return encoded, decoded


    autoencoder = AutoEncoder()
    optimizer = torch.optim.Adam(autoencoder.parameters(), lr=LR)
    loss_func = nn.MSELoss()

    for epoch in range(EPOCH):
        encoded, decoded = autoencoder(AE_input)
        loss = loss_func(decoded, AE_output)  # mean squared error
        optimizer.zero_grad()  # clear gradients for this training step
        loss.backward()  # backpropagation, compute gradients
        optimizer.step()  # apply gradients
        print(f"epoch {epoch}: loss = {loss.item():.6f}")

    # Inspect the low-dimensional output of the bottleneck layer
    with torch.no_grad():
        hidden_z, _ = autoencoder(AE_input)
    hidden_z = hidden_z.cpu().numpy()  # NumPy array of shape (N, 3)
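
Since the bottleneck has only 3 features, the encoder output collected above can be inspected as a 3-D scatter plot, as the comment on the last encoder layer suggests. The following is a minimal sketch, assuming matplotlib is installed. The helper `plot_latent`, the axis labels, and the output filename are hypothetical names chosen for illustration, and the random array only stands in for the real latent codes.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so the sketch also runs without a display
import matplotlib.pyplot as plt
import numpy as np


def plot_latent(z, path):
    """Scatter-plot an (N, 3) array of latent codes in 3-D and save the figure to `path`."""
    z = np.asarray(z)
    if z.ndim != 2 or z.shape[1] != 3:
        raise ValueError(f"expected shape (N, 3), got {z.shape}")
    fig = plt.figure(figsize=(6, 5))
    ax = fig.add_subplot(projection="3d")
    ax.scatter(z[:, 0], z[:, 1], z[:, 2], s=8)
    ax.set_xlabel("z1")
    ax.set_ylabel("z2")
    ax.set_zlabel("z3")
    fig.savefig(path)
    plt.close(fig)
    return ax


# Placeholder data (an assumption): replace with the NumPy array of latent codes
# produced by the encoder.
rng = np.random.default_rng(0)
z_demo = rng.normal(size=(100, 3))
out_path = os.path.join(tempfile.gettempdir(), "latent.png")
ax = plot_latent(z_demo, out_path)
```

If labels for the samples are available, passing them as the `c=` argument of `ax.scatter` colors the points by class, which makes it easier to judge whether the 3 latent features separate the data.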